Understanding Amundsen Data Catalog: Architecture, Features & Installation

Amundsen Data Catalog is a powerful open-source solution for data discovery and governance.

Created by Lyft, it simplifies the process of finding and managing data across various sources. With its user-friendly interface and robust features, Amundsen enables organizations to enhance data visibility and compliance. This catalog is essential for teams looking to improve their data management practices.

Amundsen data catalog: The origin story

Amundsen was born out of the need to solve data discovery and data governance problems at Lyft. Lyft open-sourced the project in October 2019, soon after it started using Amundsen internally, and it has grown in popularity and adoption since then.

This article takes a deep dive into Amundsen’s architecture, features, and use cases. At the end, we’ll also talk about other open-source alternatives to Amundsen. But before that, let’s discuss why and how Amundsen was created.

Table of contents

- Amundsen data catalog: The origin story

- Architecture

- Features

- How to set up Amundsen

- Open-source alternatives to Amundsen

- How organizations are making the most out of their data using Atlan

- Conclusion

- FAQs about Amundsen Data Catalog

- Related reads

Is Amundsen open source?

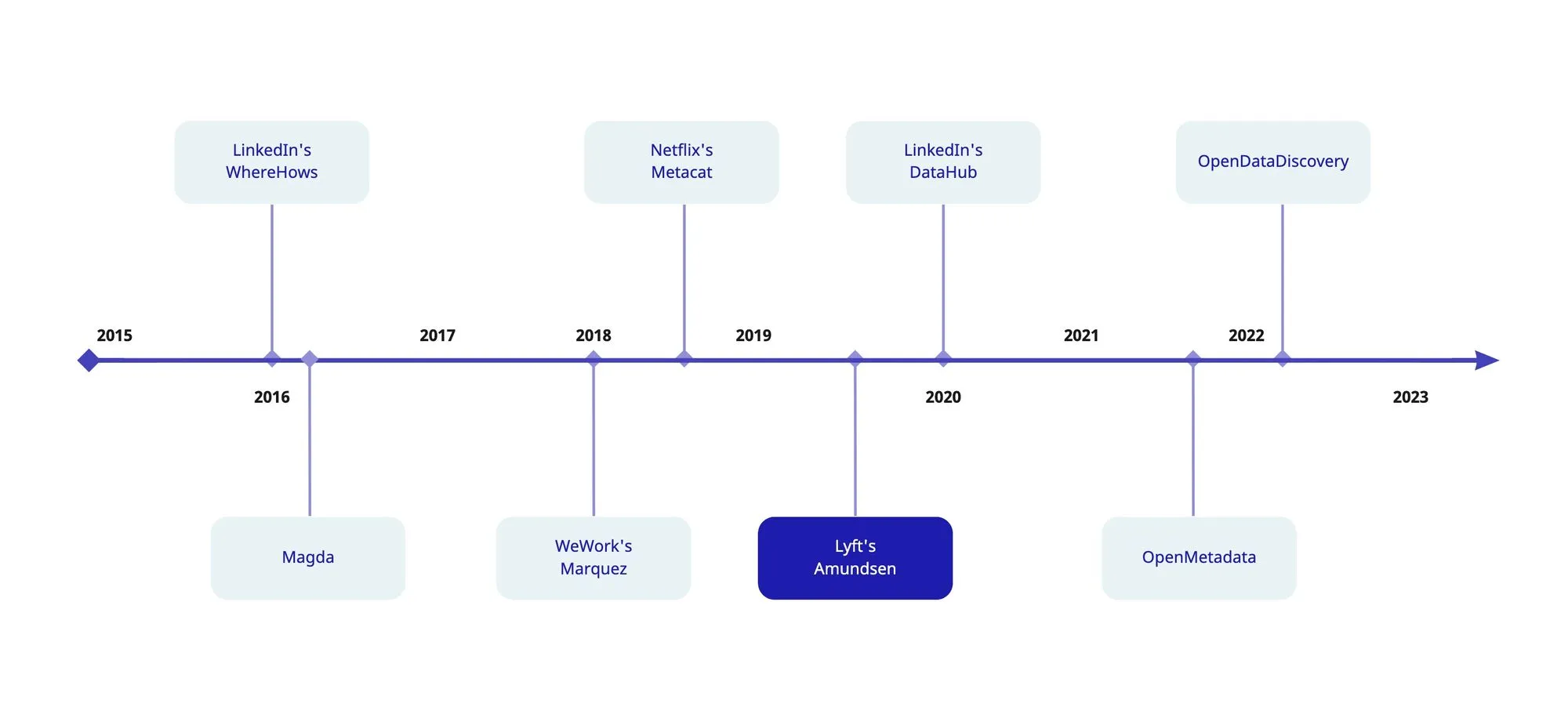

Yes, Amundsen was open-sourced in 2019, but before that, there were several open-source data catalogs such as Apache Atlas, WeWork’s Marquez, and Netflix’s Metacat, among others. Some of these tools were created to suit certain data stacks, and the rest were not very flexible.

Here’s the timeline to give you an idea of where Lyft’s Amundsen came in compared to other open-source data catalogs:

Timeline showing the release of open-source data catalog tools. Image by Atlan.

With Amundsen, the engineering team at Lyft decided to take a fresh approach to the problem of data discovery and governance, using a flexible microservice-based architecture. With this architecture, you can replace many of the components based on your preferences and requirements, which made it enticing for many businesses.

Over the last few years, data catalogs have made life easier for engineering and business teams by enabling data discovery and governance across data sources, targets, business teams, and hierarchies.

With time, data catalogs are building newer features, such as data lineage, profiling, data quality, and more, to enable various businesses to benefit from the tools. Amundsen’s story isn’t much different. Let’s begin by understanding how Amundsen is architected and how it works.

Amundsen Data Catalog Demo

Here’s a hosted demo environment that should give you a fair sense of the Lyft Amundsen data catalog platform.

Amundsen architecture

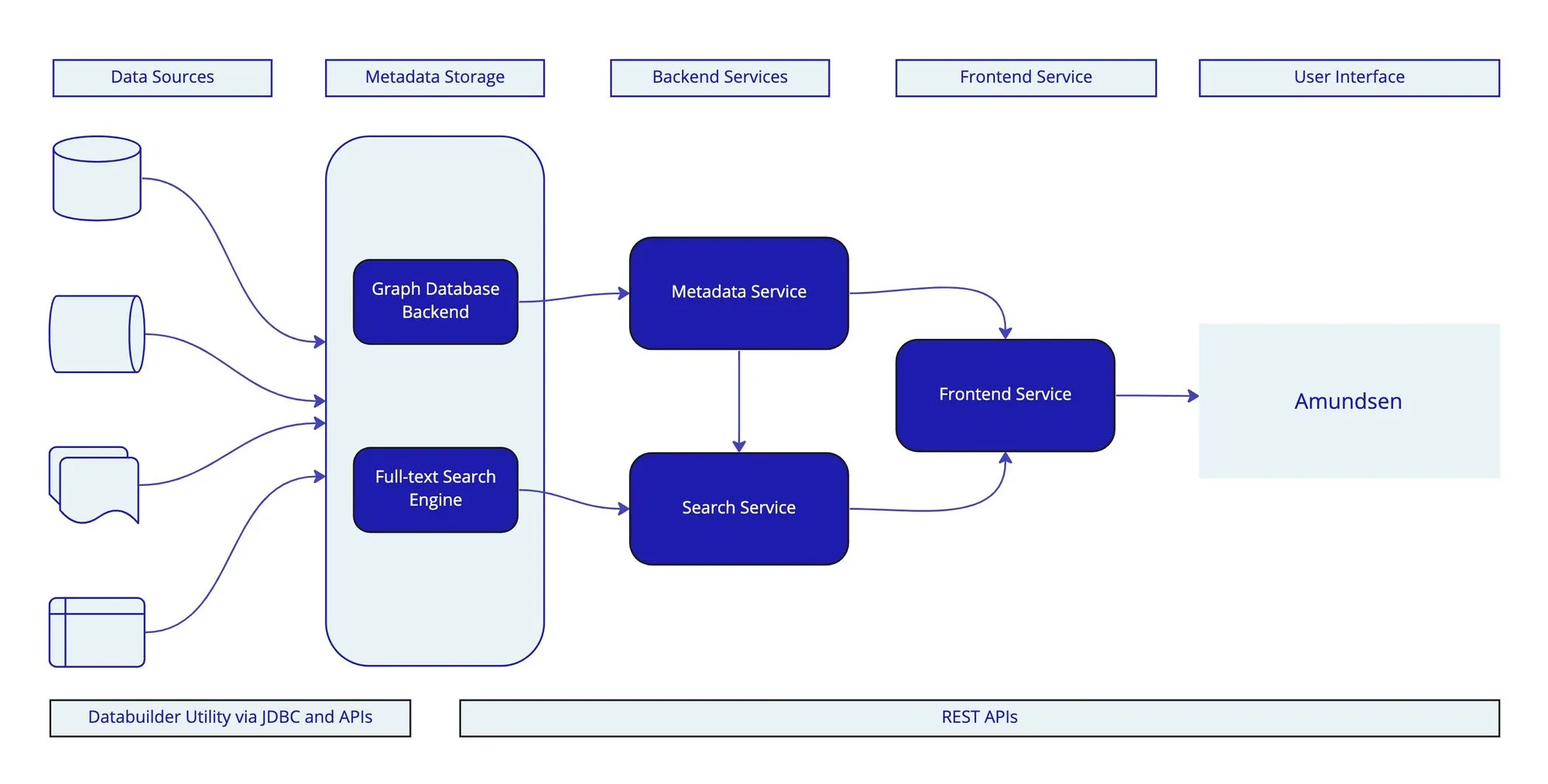

Amundsen’s architecture comprises four major components.

- Metadata service

- Search Service

- Frontend service

- Databuilder utility

Specific technologies back these components out of the box, but there’s enough flexibility to use drop-in or almost drop-in replacements with some customizations.

As with every application, Amundsen has backend and frontend components.

The backend comprises the metadata and search services, each backed by a different type of data store. The frontend component has only one service, which is the frontend service.

Amundsen also has a library that helps you connect to different sources and targets for metadata management. This library is collectively called the databuilder utility. Before discussing the services in detail, let’s look at the following diagram depicting Amundsen’s architecture:

Schematic representation of Amundsen architecture. Image by Atlan.

1. Metadata service

The metadata service provides a way of communicating with the database that stores the actual metadata that backs your data catalog. This might be the technical metadata stored in information_schema in most databases, or it might be business-context data or lineage metadata.

As mentioned earlier, Amundsen was created to be more flexible than earlier data catalogs, so the API is designed to support different databases for storing the metadata. The metadata service is exposed via a REST API. Engineers can use this API to interact with Amundsen programmatically, while business users interact with it through the front end.
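To make the REST interaction more concrete, here is a minimal sketch of how a client might address a single table in the metadata service. The `/table/<key>` path, port 5002, and the `database://cluster.schema/table` key layout are assumptions based on a default local deployment, and `table_key` and `table_metadata_url` are hypothetical helpers rather than part of Amundsen.

```python
def table_key(database: str, cluster: str, schema: str, table: str) -> str:
    """Build a table key in the assumed database://cluster.schema/table layout."""
    return f"{database}://{cluster}.{schema}/{table}"


def table_metadata_url(base_url: str, key: str) -> str:
    """Return the URL a client would GET to read one table's metadata (assumed path)."""
    return f"{base_url.rstrip('/')}/table/{key}"


key = table_key("postgres", "analytics", "public", "orders")
url = table_metadata_url("http://localhost:5002", key)
print(url)
```

A client would then issue a GET request against this URL with any HTTP library and parse the JSON response. Check the endpoint path against the version you deploy before relying on it.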

Like many other data catalogs, Amundsen uses neo4j as its default metadata store, but you can also use proprietary graph databases like AWS Neptune or even different data catalogs like Apache Atlas. The databuilder utility, which we will discuss later, provides a way to integrate Amundsen with Atlas. The following snippet connects to a local Atlas instance and creates the entities that Amundsen requires:

    from apache_atlas.client.base_client import AtlasClient
    from databuilder.types.atlas import AtlasEntityInitializer

    # Connect to a local Atlas instance using its default credentials.
    client = AtlasClient('http://localhost:21000', ('admin', 'admin'))

    # Register the entity types that Amundsen needs in Atlas.
    init = AtlasEntityInitializer(client)
    init.create_required_entities()

Here’s what the job configuration for loading and publishing a CSV extract from Atlas to Amundsen will look like:

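    from apache_atlas.client.base_client import AtlasClient
    from databuilder.loader.file_system_atlas_csv_loader import FsAtlasCSVLoader
    from databuilder.publisher.atlas_csv_publisher import AtlasCSVPublisher
    from pyhocon import ConfigFactory

    # tmp_folder is assumed to be defined earlier as the staging directory for the CSV files.
    job_config = ConfigFactory.from_dict({
        # Where the loader writes the extracted entities and relationships.
        f'loader.filesystem_csv_atlas.{FsAtlasCSVLoader.ENTITY_DIR_PATH}': f'{tmp_folder}/entities',
        f'loader.filesystem_csv_atlas.{FsAtlasCSVLoader.RELATIONSHIP_DIR_PATH}': f'{tmp_folder}/relationships',
        # Where the publisher reads them from, and how it pushes them to Atlas.
        f'publisher.atlas_csv_publisher.{AtlasCSVPublisher.ATLAS_CLIENT}': AtlasClient('http://localhost:21000', ('admin', 'admin')),
        f'publisher.atlas_csv_publisher.{AtlasCSVPublisher.ENTITY_DIR_PATH}': f'{tmp_folder}/entities',
        f'publisher.atlas_csv_publisher.{AtlasCSVPublisher.RELATIONSHIP_DIR_PATH}': f'{tmp_folder}/relationships',
        f'publisher.atlas_csv_publisher.{AtlasCSVPublisher.ATLAS_ENTITY_CREATE_BATCH_SIZE}': 10,
        f'publisher.atlas_csv_publisher.{AtlasCSVPublisher.REGISTER_ENTITY_TYPES}': True
    })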

The early documentation of Amundsen suggested that a backend like MySQL could also be used for storing the metadata. With the official documentation entirely outdated, there’s no in-depth tutorial on how to do that.

2. Search Service

The search service powers the data search and discovery feature. A full-text search backend helps business users get fast search results from the data catalog. The search service indexes the same data that the metadata service already stores in persistent storage. Like the metadata service, the search service is exposed via an API, in this case a search API for querying the technical metadata, business-context metadata, and so on.

Amundsen’s default search backend is Elasticsearch, but you can use other engines like AWS OpenSearch, Algolia, Apache Solr, and so on. This would require a fair bit of customization as you’d need to modify all of the databuilder library components that let you load and publish data into Elasticsearch indexes.
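To illustrate what a full-text search backend does with catalog metadata, here is a toy inverted index in plain Python. It is only a sketch of the general technique, not how Elasticsearch or Amundsen implement search, and the table keys and descriptions are made up for the example.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(documents: dict[str, str]) -> dict[str, set[str]]:
    """Map every token to the set of table keys whose metadata contains it."""
    index: dict[str, set[str]] = defaultdict(set)
    for key, text in documents.items():
        for token in tokenize(text):
            index[token].add(key)
    return index


def search(index: dict[str, set[str]], query: str) -> list[str]:
    """Return the keys that contain every token of the query, sorted for stability."""
    tokens = tokenize(query)
    if not tokens:
        return []
    matches = set.intersection(*(index.get(token, set()) for token in tokens))
    return sorted(matches)


# Hypothetical table metadata: key -> table name plus description.
tables = {
    "public.orders": "orders Customer orders with payment status",
    "public.customers": "customers Customer master data",
    "tmp.orders_backup": "orders_backup Temporary copy of orders",
}
index = build_index(tables)
print(search(index, "customer orders"))
```

A real search engine adds relevance ranking, stemming, and fuzzy matching on top of this lookup, which is why swapping the engine touches every component that writes to the index.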

3. Frontend service

The frontend service, built on React, lets business users interact with the Amundsen web application. Both backend services power the front end with REST APIs and search APIs that interact with neo4j and Elasticsearch, respectively. The frontend service is responsible for displaying all the metadata in a readable and understandable fashion.

The frontend isn’t only a read-only search interface; business users can enrich metadata by adding different types of information to the technical metadata. Tagging, classification, and annotation are some examples of metadata enrichment.

Moreover, the frontend is customizable enough to allow you to build other essential features like SSO on top. Amundsen also lets you integrate with different BI tools and query interfaces to enable features like data preview.

A typical integration in the frontend would involve the following steps:

- Ensure the external application is up and running and is accessible by Amundsen

- Modify the frontend code to interact with the new integration

- Modify the frontend configuration file (or directly update the environment variables)

- Build the frontend service for the new integration to take effect

To follow these steps in detail, you can go through our in-depth tutorial on setting up Okta OIDC for Amundsen or setting up Auth0 OIDC for Amundsen.

4. Databuilder utility

Rather than asking you to build your own metadata extraction and ingestion scripts, Amundsen provides a standard library of scripts with data samples and working examples. You can automate these scripts using a scheduler like Airflow. This utility eventually ends up populating all the data in your data catalog.

When using a data catalog, you want to make sure that it represents the data from your various data sources, such as data warehouses and lakes. Amundsen provides the tools you need to schedule the extraction and ingestion of metadata in a way that doesn’t put undue load on the source systems. Let’s look at a sample extraction script that extracts metadata from PostgreSQL’s data dictionary:

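    from databuilder.extractor.postgres_metadata_extractor import PostgresMetadataExtractor
    from databuilder.extractor.sql_alchemy_extractor import SQLAlchemyExtractor
    from databuilder.job.job import DefaultJob
    from databuilder.task.task import DefaultTask
    from pyhocon import ConfigFactory

    # where_clause_suffix and connection_string() are assumed to be defined elsewhere in the script.
    job_config = ConfigFactory.from_dict({
        'extractor.postgres_metadata.{}'.format(PostgresMetadataExtractor.WHERE_CLAUSE_SUFFIX_KEY): where_clause_suffix,
        'extractor.postgres_metadata.{}'.format(PostgresMetadataExtractor.USE_CATALOG_AS_CLUSTER_NAME): True,
        'extractor.postgres_metadata.extractor.sqlalchemy.{}'.format(SQLAlchemyExtractor.CONN_STRING): connection_string(),
    })

    # AnyLoader is a placeholder: substitute the loader that matches your backend.
    job = DefaultJob(
        conf=job_config,
        task=DefaultTask(
            extractor=PostgresMetadataExtractor(),
            loader=AnyLoader()))

    job.launch()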

In this script, you only need to provide the connection information and the WHERE clause to filter the schemas and tables you want. You can selectively bring metadata into your data catalog, avoiding temporary and transient tables.
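As a rough sketch of how such a filter could be assembled, the helper below builds a WHERE clause that keeps selected schemas and skips tables by name prefix. `build_where_clause_suffix` is a hypothetical helper, and the unqualified `table_schema` and `table_name` column names are assumptions; the extractor's underlying query may expect a table alias in front of them.

```python
import re

_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def build_where_clause_suffix(schemas: list[str], skip_prefixes: list[str]) -> str:
    """Build a WHERE clause that keeps the given schemas and skips tables by name prefix."""
    for name in [*schemas, *skip_prefixes]:
        # The values are inlined into SQL, so reject anything that is not a plain identifier.
        if not _IDENTIFIER.match(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    if not schemas:
        raise ValueError("at least one schema is required")
    schema_list = ", ".join(f"'{schema}'" for schema in schemas)
    clauses = [f"table_schema in ({schema_list})"]
    # Note: an underscore inside a prefix acts as a single-character wildcard in LIKE.
    clauses += [f"table_name not like '{prefix}%'" for prefix in skip_prefixes]
    return "where " + " and ".join(clauses)


where_clause_suffix = build_where_clause_suffix(["public", "sales"], ["tmp", "staging"])
print(where_clause_suffix)
```

The resulting string can be passed as the `where_clause_suffix` value in the job configuration, after adjusting the column names to whatever the extractor's query exposes.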

On top of extraction and ingestion, the databuilder utility provides various ways to transform your data to suit your requirements. This is a big plus if you want to get metadata from non-standard or esoteric data sources. Let’s look at snippets of an Airflow DAG that does the following things:

1. Extracts data from a PostgreSQL database

2. Loads into the neo4j metadata database

3. Publishes the data to the Elasticsearch database

Here’s the job configuration for the job that takes care of steps 1 and 2:

    from databuilder.loader.file_system_neo4j_csv_loader import FsNeo4jCSVLoader
    from databuilder.publisher import neo4j_csv_publisher
    from pyhocon import ConfigFactory

    job_config = ConfigFactory.from_dict({
        # Step 1: extract table metadata from PostgreSQL.
        f'extractor.postgres_metadata.{PostgresMetadataExtractor.WHERE_CLAUSE_SUFFIX_KEY}': where_clause_suffix,
        f'extractor.postgres_metadata.{PostgresMetadataExtractor.USE_CATALOG_AS_CLUSTER_NAME}': True,
        f'extractor.postgres_metadata.extractor.sqlalchemy.{SQLAlchemyExtractor.CONN_STRING}': connection_string(),
        # Step 2: stage nodes and relationships as CSV files, then publish them to neo4j.
        f'loader.filesystem_csv_neo4j.{FsNeo4jCSVLoader.NODE_DIR_PATH}': node_files_folder,
        f'loader.filesystem_csv_neo4j.{FsNeo4jCSVLoader.RELATION_DIR_PATH}': relationship_files_folder,
        f'publisher.neo4j.{neo4j_csv_publisher.NODE_FILES_DIR}': node_files_folder,
        f'publisher.neo4j.{neo4j_csv_publisher.RELATION_FILES_DIR}': relationship_files_folder,
        f'publisher.neo4j.{neo4j_csv_publisher.NEO4J_END_POINT_KEY}': neo4j_endpoint,
        f'publisher.neo4j.{neo4j_csv_publisher.NEO4J_USER}': neo4j_user,
        f'publisher.neo4j.{neo4j_csv_publisher.NEO4J_PASSWORD}': neo4j_password,
        f'publisher.neo4j.{neo4j_csv_publisher.JOB_PUBLISH_TAG}': 'unique_tag',
    })

Here’s the job config for the task that takes care of step 3:

    import uuid

    from databuilder.extractor.neo4j_extractor import Neo4jExtractor
    from databuilder.loader.file_system_elasticsearch_json_loader import FSElasticsearchJSONLoader
    from databuilder.publisher.elasticsearch_publisher import ElasticsearchPublisher
    from pyhocon import ConfigFactory

    # es is assumed to be an Elasticsearch client created earlier in the DAG.
    elasticsearch_client = es
    # Each run publishes into a uniquely named index; the alias gives searches a stable name.
    elasticsearch_new_index_key = f'tables{uuid.uuid4()}'
    elasticsearch_new_index_key_type = 'table'
    elasticsearch_index_alias = 'table_search_index'

    job_config = ConfigFactory.from_dict({
        # Read the table documents back out of neo4j.
        f'extractor.search_data.extractor.neo4j.{Neo4jExtractor.GRAPH_URL_CONFIG_KEY}': neo4j_endpoint,
        f'extractor.search_data.extractor.neo4j.{Neo4jExtractor.MODEL_CLASS_CONFIG_KEY}':
            'databuilder.models.table_elasticsearch_document.TableESDocument',
        f'extractor.search_data.extractor.neo4j.{Neo4jExtractor.NEO4J_AUTH_USER}': neo4j_user,
        f'extractor.search_data.extractor.neo4j.{Neo4jExtractor.NEO4J_AUTH_PW}': neo4j_password,
        # Stage them as a JSON file, then publish that file to Elasticsearch.
        f'loader.filesystem.elasticsearch.{FSElasticsearchJSONLoader.FILE_PATH_CONFIG_KEY}': extracted_search_data_path,
        f'loader.filesystem.elasticsearch.{FSElasticsearchJSONLoader.FILE_MODE_CONFIG_KEY}': 'w',
        f'publisher.elasticsearch.{ElasticsearchPublisher.FILE_PATH_CONFIG_KEY}': extracted_search_data_path,
        f'publisher.elasticsearch.{ElasticsearchPublisher.FILE_MODE_CONFIG_KEY}': 'r',
        f'publisher.elasticsearch.{ElasticsearchPublisher.ELASTICSEARCH_CLIENT_CONFIG_KEY}': elasticsearch_client,
        f'publisher.elasticsearch.{ElasticsearchPublisher.ELASTICSEARCH_NEW_INDEX_CONFIG_KEY}': elasticsearch_new_index_key,
        f'publisher.elasticsearch.{ElasticsearchPublisher.ELASTICSEARCH_DOC_TYPE_CONFIG_KEY}': elasticsearch_new_index_key_type,
        f'publisher.elasticsearch.{ElasticsearchPublisher.ELASTICSEARCH_ALIAS_CONFIG_KEY}': elasticsearch_index_alias
    })
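The configuration above pairs a freshly named index (`tables` plus a UUID) with a fixed alias (`table_search_index`). A common reason for this layout is that searches keep reading through the alias while a new index is being filled, and the alias is repointed once the load finishes. The sketch below shows that pattern with an in-memory stand-in; `FakeSearchCluster` is hypothetical and is not the Elasticsearch client or the databuilder publisher.

```python
import uuid


class FakeSearchCluster:
    """In-memory stand-in for a search backend with indexes and aliases (hypothetical)."""

    def __init__(self) -> None:
        self.indexes: dict[str, list[dict]] = {}
        self.aliases: dict[str, str] = {}

    def publish(self, alias: str, documents: list[dict]) -> str:
        """Load documents into a new index, then repoint the alias to it."""
        new_index = f"tables{uuid.uuid4()}"
        self.indexes[new_index] = list(documents)
        old_index = self.aliases.get(alias)
        # Readers keep hitting the old index until this single assignment.
        self.aliases[alias] = new_index
        if old_index is not None:
            del self.indexes[old_index]
        return new_index

    def search(self, alias: str, term: str) -> list[dict]:
        """Return documents in the aliased index whose name contains the term."""
        return [doc for doc in self.indexes[self.aliases[alias]] if term in doc["name"]]


cluster = FakeSearchCluster()
first = cluster.publish("table_search_index", [{"name": "orders"}])
second = cluster.publish("table_search_index", [{"name": "orders"}, {"name": "order_items"}])
print(len(cluster.search("table_search_index", "order")))
```

Because callers only ever see the alias, a failed load leaves the previous index in place and search results unchanged.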

If you want to use different backend storage engines instead of neo4j and Elasticsearch, these out-of-the-box scripts from the databuilder utility need updating. Rest assured, though, that you can plug in other backend systems.
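The job configurations above all name the same three stages: an extractor, a loader, and a publisher. The following plain-Python sketch shows that staging pattern with a CSV file in between and a dictionary standing in for the metadata store. None of these functions are databuilder APIs; they only illustrate why swapping the backend mostly means replacing the last stage.

```python
import csv
import tempfile
from pathlib import Path


def extract():
    """Yield table records, standing in for a metadata extractor."""
    yield {"schema": "public", "table": "orders"}
    yield {"schema": "public", "table": "customers"}


def load(records, folder: Path) -> Path:
    """Stage the extracted records as a CSV file, as a loader would."""
    path = folder / "nodes.csv"
    with path.open("w", newline="") as handle:
        writer = csv.DictWriter(handle, fieldnames=["schema", "table"])
        writer.writeheader()
        writer.writerows(records)
    return path


def publish(path: Path, store: dict) -> int:
    """Read the staged file and upsert each row into the target store."""
    with path.open(newline="") as handle:
        for row in csv.DictReader(handle):
            store[f"{row['schema']}.{row['table']}"] = row
    return len(store)


metadata_store: dict = {}
with tempfile.TemporaryDirectory() as tmp:
    staged = load(extract(), Path(tmp))
    published = publish(staged, metadata_store)
print(published, sorted(metadata_store))
```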

Amundsen features

Amundsen’s architecture enables three main features to enhance the experience of your business teams working with data. These features focus on discoverability, visibility, and compliance.

Some tools before Amundsen didn’t enjoy wide adoption partly due to a less intuitive user interface and poor user experience, which is why Amundsen created a usable search and discovery interface. Let’s look at how discovery, governance, and lineage work in Amundsen.

1. Data discovery

Data discovery without a data catalog involves searching and sorting through Confluence documents, Excel spreadsheets, Slack messages, source-specific data dictionaries, ETL scripts, and whatnot.

Amundsen approaches this problem by centralizing the technical data catalog and enriching it with business metadata. This allows business teams to have a better view of the data, how it is currently used, and how it can be used.

The data discovery engine is powered by a full-text search engine that indexes data stored in the persistent backend by the metadata service. Amundsen handles the continuous updates to the search index that give you the most up-to-date view of the data.

The default metadata model stores basic data dictionary metadata, tags, classifications, comments, etc. You can customize the metadata model to add more fields by changing the metadata service APIs and the database schema.
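As an illustration of what such a metadata model can look like, here is a small sketch that keeps technical fields and user-added business context side by side. The class and field names are hypothetical and do not mirror Amundsen's actual schema.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ColumnMetadata:
    """Technical metadata for one column, as a data dictionary would report it."""
    name: str
    data_type: str
    description: str = ""


@dataclass
class TableMetadata:
    """One catalog entry: technical fields plus business context added by users."""
    schema: str
    name: str
    columns: list[ColumnMetadata] = field(default_factory=list)
    description: str = ""
    tags: set[str] = field(default_factory=set)
    owners: set[str] = field(default_factory=set)

    def enrich(self, description: Optional[str] = None, tags=(), owners=()) -> None:
        """Add business context without touching the technical fields."""
        if description is not None:
            self.description = description
        self.tags.update(tags)
        self.owners.update(owners)


orders = TableMetadata(
    schema="public",
    name="orders",
    columns=[ColumnMetadata("order_id", "bigint"), ColumnMetadata("email", "text")],
)
orders.enrich(description="One row per customer order", tags={"finance"}, owners={"data-team@example.com"})
print(sorted(orders.tags), sorted(orders.owners))
```

Adding a field to a model like this is the easy part. In Amundsen, the same change also has to be reflected in the metadata service API and the database schema, as noted above.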

All of this is exposed to the end user with an intuitive and easily usable web interface, where the end user can search the metadata and enrich it.

2. Data governance

The other major problem that Lyft’s engineering team attacked was dealing with security and compliance around data handling. Data governance helps you answer questions like who owns the data, who should have access to the data, and how the data can be shared within the organization and outside. Amundsen uses the concept of owners, maintainers, and frequent users to answer the questions mentioned above.

Amundsen’s job doesn’t stop at displaying what you can and can’t access. It can integrate with your authentication and authorization systems to provide and restrict access to data based on the policies in place.

Moreover, when dealing with PII (personally identifiable information) and PHI (protected health information), you can mask and hide data and restrict access based on compliance standards like GDPR, CCPA, and HIPAA.
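One way to picture tag-driven masking is a preview layer that checks each column's tags before showing values. The sketch below is a generic illustration under that assumption, not Amundsen code; the tag names and the `preview_row` helper are hypothetical.

```python
SENSITIVE_TAGS = {"pii", "phi"}  # hypothetical tag names


def mask_value(value: str, visible: int = 2) -> str:
    """Keep the last `visible` characters and replace the rest with asterisks."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def preview_row(row: dict[str, str], column_tags: dict[str, set[str]], can_view_sensitive: bool) -> dict[str, str]:
    """Return a row for data preview, masking tagged columns for users without clearance."""
    masked = {}
    for column, value in row.items():
        is_sensitive = bool(column_tags.get(column, set()) & SENSITIVE_TAGS)
        masked[column] = mask_value(value) if is_sensitive and not can_view_sensitive else value
    return masked


row = {"order_id": "1001", "email": "ana@example.com"}
tags = {"email": {"pii"}}
print(preview_row(row, tags, can_view_sensitive=False))
```

Keeping the decision in one function makes the policy easy to audit: the tags come from the catalog, and the clearance flag comes from your authorization system.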

3. Data lineage

Data lineage can be seen branching out from the data discovery and visibility aspect of data catalogs; however, it has data governance aspects too. Data lineage tells the story of the data - where it came from, how it has changed over its journey to its destination, and how reliable it is. This visibility of the flow of data builds trust within the system and helps debug when an issue arises.

Data lineage has always been there. You could always go to the ETL scripts, stored procedures, and your scheduler jobs to infer data lineage manually, but that was just limited to the engineers and mainly used to debug issues and build on top of the existing ETL pipelines.

A visual representation of data lineage opens it up for use by business teams. Also, the business teams can contribute to the lineage and add context and annotations both for themselves and engineers.

Data lineage builds trust by enabling transparency around data within the organization, which was the third key problem that Amundsen was solving. Many of the popular tools in the modern data stack have automated the collection of data lineage. Amundsen can get lineage information directly from these tools, store it in the backend, and index it to be exposed by the web and search interface.
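Once lineage is stored as edges between tables, questions such as "what breaks if this table changes?" become graph traversals. Here is a minimal sketch using a breadth-first search over a hypothetical set of lineage edges; the table names are invented and the storage format is an assumption for illustration.

```python
from collections import deque

# Hypothetical lineage edges: each key feeds the tables listed in its value.
DOWNSTREAM = {
    "raw.orders": ["staging.orders"],
    "raw.customers": ["staging.customers"],
    "staging.orders": ["marts.revenue"],
    "staging.customers": ["marts.revenue"],
    "marts.revenue": [],
}


def downstream_of(table: str, edges: dict[str, list[str]]) -> list[str]:
    """Return every table reachable from `table`, in breadth-first order."""
    seen, order = {table}, []
    queue = deque([table])
    while queue:
        current = queue.popleft()
        for child in edges.get(current, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


def upstream_of(table: str, edges: dict[str, list[str]]) -> list[str]:
    """Return every table that `table` depends on, by walking the edges in reverse."""
    reversed_edges: dict[str, list[str]] = {}
    for parent, children in edges.items():
        for child in children:
            reversed_edges.setdefault(child, []).append(parent)
    return downstream_of(table, reversed_edges)


print(downstream_of("raw.orders", DOWNSTREAM))
print(upstream_of("marts.revenue", DOWNSTREAM))
```

The downstream view supports impact analysis before a change, while the upstream view helps trace a bad number back to its source.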

How to set up Amundsen

Setting up Amundsen on any cloud platform is straightforward. Irrespective of the infrastructure you are using, you’ll end up going through the following steps when installing Amundsen:

- Create a virtual machine (VM)

- Configure networking to enable public access to Amundsen

- Log into the VM and install the basic utilities

- Install Docker and Docker Compose

- Clone the Amundsen GitHub repository

- Deploy Amundsen using Docker Compose

- Load sample data using databuilder
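After the deployment step, it helps to confirm that all three services respond before loading data. The sketch below polls a health endpoint on each one. The ports (5000 for the frontend, 5001 for search, 5002 for metadata) and the `/healthcheck` path are assumptions based on a default local Docker Compose setup, so adjust them to your deployment.

```python
from urllib.request import urlopen

# Assumed default ports of a local Docker Compose deployment.
SERVICES = {
    "frontend": "http://localhost:5000/healthcheck",
    "search": "http://localhost:5001/healthcheck",
    "metadata": "http://localhost:5002/healthcheck",
}


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code for a GET request, or 0 if the request fails."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.status
    except OSError:
        return 0


def check_services(services=SERVICES, fetch=http_status) -> dict[str, bool]:
    """Map each service name to True when its health endpoint answers with 200."""
    return {name: fetch(url) == 200 for name, url in services.items()}
```

Calling `check_services()` on the VM returns a dictionary such as `{"frontend": True, ...}`. The `fetch` parameter exists so the check can be exercised without a running deployment.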

Here’s the detailed guide for you to follow: Amundsen Setup Guide

The Amundsen installation process is detailed in this extensive walkthrough, and cloud platform-specific guides are also available.

Other open-source alternatives to Amundsen

At the beginning of the article, we discussed the timeline of open-source data catalogs. Some of the data catalogs mentioned in that timeline are still active and going, while others, like Netflix’s Metacat and WeWork’s Marquez, haven’t seen wide adoption. Since adoption is one of the key metrics while assessing open-source projects, you are left with projects like Apache Atlas and DataHub that might be worth your attention.

Other up-and-coming open-source alternatives like OpenMetadata and OpenDataDiscovery are also worth considering because of their additional features on top of the basic data catalog.

Look out for other open-source data catalogs, as more will keep coming, given it is still a new area in data and analytics engineering.

How organizations are making the most out of their data using Atlan

The recently published Forrester Wave report compared all the major enterprise data catalogs and positioned Atlan as the market leader ahead of all others. The comparison was based on 24 different aspects of cataloging, broadly across the following three criteria:

- Automatic cataloging of the entire technology, data, and AI ecosystem

- Enabling an AI- and automation-first data ecosystem

- Prioritizing data democratization and self-service

These criteria made Atlan the ideal choice for a major audio content platform, where the data ecosystem was centered around Snowflake. The platform sought a “one-stop shop for governance and discovery,” and Atlan played a crucial role in ensuring their data was “understandable, reliable, high-quality, and discoverable.”

For another organization, Aliaxis, which also uses Snowflake as their core data platform, Atlan served as “a bridge” between various tools and technologies across the data ecosystem. With its organization-wide business glossary, Atlan became the go-to platform for finding, accessing, and using data. It also significantly reduced the time spent by data engineers and analysts on pipeline debugging and troubleshooting.

A key goal of Atlan is to help organizations maximize the use of their data for AI use cases. As generative AI capabilities have advanced in recent years, organizations can now do more with both structured and unstructured data—provided it is discoverable and trustworthy, or in other words, AI-ready.

Tide’s Story of GDPR Compliance: Embedding Privacy into Automated Processes

- Tide, a UK-based digital bank with nearly 500,000 small business customers, sought to improve their compliance with GDPR’s Right to Erasure, commonly known as the “Right to be forgotten”.

- After adopting Atlan as their metadata platform, Tide’s data and legal teams collaborated to define personally identifiable information in order to propagate those definitions and tags across their data estate.

- Tide used Atlan Playbooks (rule-based bulk automations) to automatically identify, tag, and secure personal data, turning a 50-day manual process into mere hours of work.

FAQs about Amundsen Data Catalog

1. What is the Amundsen Data Catalog?

The Amundsen Data Catalog is an open-source data catalog developed by Lyft. It enhances data discovery, visibility, and governance by providing a centralized platform for managing metadata and data assets.

2. How does the Amundsen Data Catalog improve data discovery?

Amundsen improves data discovery by centralizing metadata and enriching it with business context. This allows users to easily search and access relevant data across various sources, streamlining the data exploration process.

3. What are the key features of the Amundsen Data Catalog?

Key features of the Amundsen Data Catalog include a flexible architecture, metadata service, search service, and a user-friendly frontend. It also supports data lineage and integrates with various data sources for comprehensive data management.

4. How can organizations implement the Amundsen Data Catalog effectively?

Organizations can implement Amundsen by following a structured setup process, which includes creating a virtual machine, installing necessary utilities, and deploying the catalog using Docker. Detailed guides are available for specific cloud platforms.

5. What are the benefits of using the Amundsen Data Catalog for data governance?

Amundsen enhances data governance by providing clear ownership and access controls for data assets. It helps organizations comply with data regulations and ensures that sensitive information is managed securely.

6. How does the Amundsen Data Catalog support data lineage and auditing?

Amundsen supports data lineage by tracking the flow of data from its source to its destination. This visibility helps organizations understand data transformations and ensures compliance with auditing requirements.

Conclusion

This article walked you through Amundsen’s architecture, features, technical capabilities, and use cases. It also discussed the possibility of customizing Amundsen to suit your needs and requirements.

For engineering-first teams, using Amundsen might be a good option, considering that it requires a fair bit of customization to build some basic security, privacy, and user experience features on top.

Related Reads

- Open Source Data Catalog - List of 6 Popular Tools to Consider in 2025

- Apache Atlas: Origins, Architecture, Capabilities, Installation, Alternatives & Comparison

- Netflix Metacat: Origin, Architecture, Features & More

- DataHub: LinkedIn’s Open-Source Tool for Data Discovery, Catalog, and Metadata Management

- Amundsen Demo: Explore Amundsen in a Pre-configured Sandbox Environment

- Amundsen Set Up Tutorial: A Step-by-Step Installation Guide Using Docker

- DataHub Set Up Tutorial: A Step-by-Step Installation Guide Using Docker

- How to Install Apache Atlas?: A Step-by-Step Setup Guide

- How To Set Up Okta OIDC Authentication in Amundsen

- Amundsen Data Lineage - How to Set Up Column level Lineage Using dbt

- Amundsen vs. DataHub: Which Data Discovery Tool Should You Choose?

- Amundsen vs. Atlas: Which Data Discovery Tool Should You Choose?

- Databook: Uber’s In-house Metadata Catalog Powering Scalable Data Discovery

- Step-By-Step Guide to Configure and Set up Amundsen on AWS

- Airbnb Data Catalog: Democratizing Data With Dataportal

- Amundsen Alternatives – DataHub, Metacat, and Apache Atlas

- Lexikon: Spotify’s Efficient Solution For Data Discovery And What You Can Learn From It

- OpenMetadata: Design Principles, Architecture, Applications & More

- OpenMetadata vs. DataHub: Compare Architecture, Capabilities, Integrations & More

- OpenMetadata vs. Amundsen: Compare Architecture, Capabilities, Integrations & More

- Amundsen Data Catalog: Understanding Architecture, Features, Ways to Install & More

- Open Data Discovery: An Overview of Features, Architecture, and Resources

- Atlan vs. DataHub: Which Tool Offers Better Collaboration and Governance Features?

- Atlan vs Amundsen: A Comprehensive Comparison of Features, Integration, Ease of Use, Governance, and Cost for Deployment

- Pinterest Querybook 101: A Step-by-Step Tutorial and Explainer for Mastering the Platform’s Analytics Tool

- Marquez by WeWork: Origin, Architecture, Features & Use Cases for 2025

- Atlan vs. Apache Atlas: What to Consider When Evaluating?

- Build vs. Buy Data Catalog: What Should Factor Into Your Decision Making?

- Magda Data Catalog: An Ultimate Guide on This Open-Source, Federated Catalog

- OpenMetadata vs. OpenLineage: Primary Capabilities, Architecture & More

- OpenMetadata Ingestion Framework, Workflows, Connectors & More

- 6 Steps to Set Up OpenMetadata: A Hands-On Guide

- Apache Atlas Alternatives: Amundsen, DataHub, and Metacat

- Guide to Setting up OpenDataDiscovery
