Data Lineage Best Practices: A Maturity Framework for Data Teams

Data lineage quick reference

| Data lineage quick reference | |
|---|---|
| What it is | A set of proven approaches for implementing, maintaining and operationalizing data lineage across the full data stack |
| Who it’s for | Data platform leads, data engineers, data governance officers, CDOs |
| Coverage target | 90%+ of production assets with documented lineage |
| Key integrations | dbt, Airflow, Snowflake, Databricks, Tableau, Looker, Power BI |
| Compliance relevance | GDPR, BCBS 239, SOX, CCPA, EU AI Act |
| Primary use cases | Impact analysis, root cause analysis, compliance audit trails, AI governance |

This guide covers the four-stage lineage maturity model, six best practices for advancing through it and how to evaluate whether your current implementation is fit for purpose.

The Friday Afternoon Problem

It is Friday at 4 PM. A dashboard your CFO checks every Monday morning shows revenue down 40%. Your team has roughly three hours to figure out whether this is a real business event or a data pipeline failure. Without lineage, this is an archaeological dig: someone opens Snowflake, reverse-engineers a SQL model, searches Slack for context on a table name nobody recognizes, and eventually discovers that a dbt model was refactored last Tuesday and silently dropped a join condition. Three hours of detective work for a five-minute fix.

Most data teams already know that lineage matters. The mistake is treating “best practices” as a universal checklist. The practices that make sense for a team running automated column-level lineage across 200 dbt models are irrelevant to a team that still tracks transformations in a spreadsheet. Applying Stage 4 practices to a Stage 1 organization does not accelerate maturity; it creates shelfware.

Find your stage, follow the practices that apply, and know exactly what comes next. Each stage builds on the one before it, and each includes the specific gate conditions you need to clear before advancing.

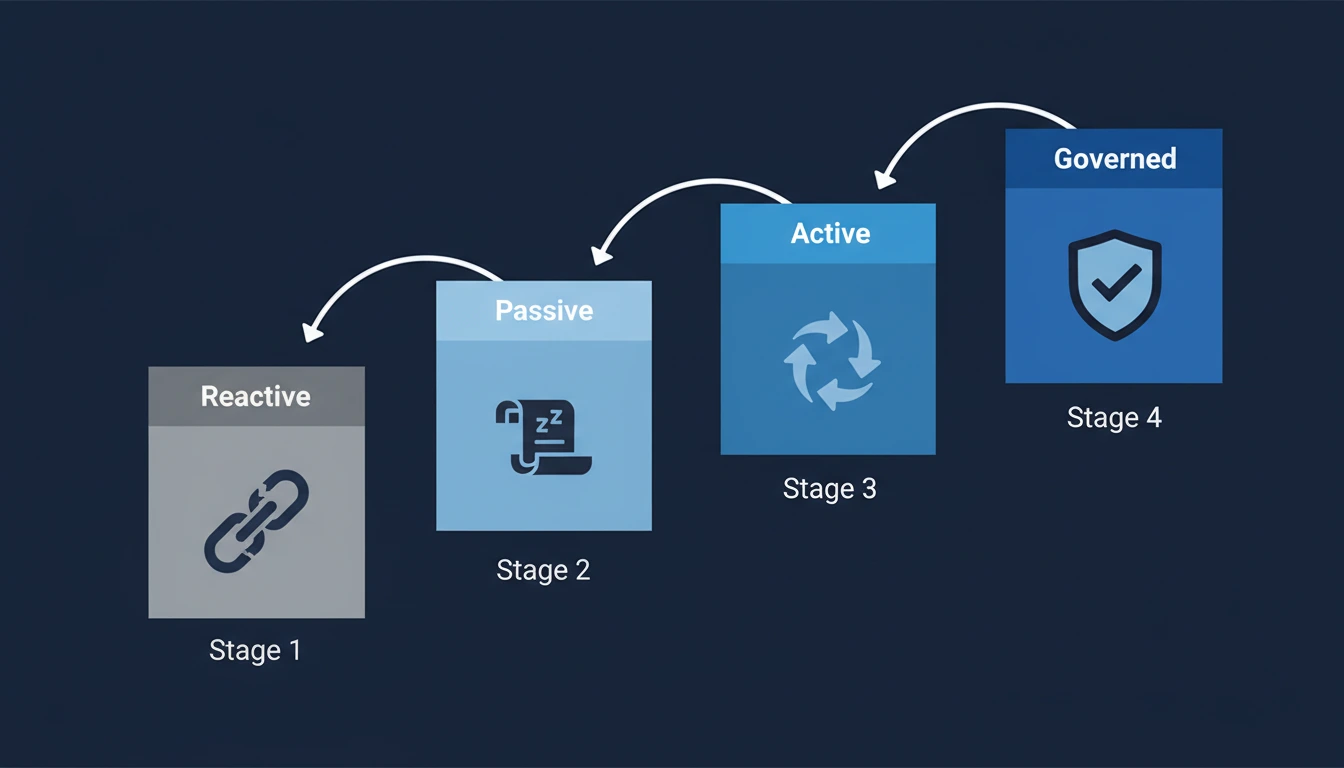

What are the four stages of the data lineage maturity model?

Most data lineage content presents a flat checklist of best practices. The problem is that the right practice depends entirely on where your organization currently is. A team implementing their first lineage tool has different priorities than a team scaling lineage across a 50-source enterprise stack. A more useful frame is a maturity model that maps which practices apply at which stage.

| Stage | Name | Characteristic behavior | Coverage |
|---|---|---|---|
| Stage 1 | Reactive | Lineage is queried only when something breaks | <20% of assets |
| Stage 2 | Passive | Lineage is documented but not actively used | 20–50% of assets |
| Stage 3 | Active | Lineage is surfaced in daily workflows by producers and consumers | 50–85% of assets |
| Stage 4 | Governed | Lineage satisfies regulatory audit, is policy-enforced and decision-traceable | 90%+ of assets |

Most organizations today are at Stage 1 or Stage 2. Most “best practices” articles address only the transition from Stage 1 to Stage 2. The practices below cover the full path to Stage 4, which is where lineage delivers its highest business and compliance value.

Data lineage maturity model: four stages from Reactive to Governed. Image by Atlan.

Best practice 1: Build cross-system coverage before optimizing depth

The most common lineage failure is optimizing within one system while leaving the broader stack dark. Teams instrument detailed lineage inside Snowflake or Databricks, then stop at the boundaries of those platforms. The result: lineage that looks complete in a demo but breaks the moment an analyst traces a metric back to a source system or forward to a dashboard.

What cross-system coverage means in practice:

Source to warehouse: Lineage must trace data from the operational systems and external sources where it originates, through ingestion pipelines, into the warehouse or lakehouse.

Warehouse through transformations: dbt models, Spark jobs and SQL transformations must be traced at table level at minimum, column level where compliance or impact analysis requires it.

Transformations to BI: The final mile (Tableau workbooks, Looker explores, Power BI reports) must be connected. Most implementations fail here because BI tools require separate connectors that many lineage tools do not provide natively.

Diagnostic question: Can a data consumer trace any number in a production dashboard back to its source system without leaving your lineage tool? If not, your coverage is not yet cross-system.

Teams that achieve end-to-end coverage from source to BI report significantly faster root cause analysis and higher trust in downstream metrics. The transition from Stage 1 to Stage 2 almost always begins here.
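The diagnostic question above amounts to a graph traversal: start at a dashboard, walk upstream and check whether the walk ends in a real source system or stalls at a platform boundary. The following is a minimal sketch under stated assumptions. The asset names, the `system.asset` naming scheme and the edge list are all hypothetical, and a real lineage tool would expose this through its own API.

```python
from collections import deque

# Hypothetical lineage edges: (upstream asset, downstream asset).
EDGES = [
    ("postgres.orders", "snowflake.raw_orders"),
    ("snowflake.raw_orders", "dbt.stg_orders"),
    ("dbt.stg_orders", "dbt.fct_revenue"),
    ("dbt.fct_revenue", "tableau.revenue_dashboard"),
    ("salesforce.accounts", "snowflake.raw_accounts"),
    ("snowflake.raw_accounts", "dbt.fct_revenue"),
]


def upstream_sources(asset, edges):
    """Return the root assets (no recorded upstream) that feed `asset`."""
    parents = {}
    for up, down in edges:
        parents.setdefault(down, set()).add(up)
    seen, roots = {asset}, set()
    queue = deque([asset])
    while queue:
        node = queue.popleft()
        ups = parents.get(node, set())
        if not ups and node != asset:
            roots.add(node)  # the trace ends here
        for up in ups:
            if up not in seen:
                seen.add(up)
                queue.append(up)
    return roots


def is_cross_system(asset, edges, source_systems):
    """True if every root feeding `asset` belongs to a known source system."""
    roots = upstream_sources(asset, edges)
    return bool(roots) and all(r.split(".")[0] in source_systems for r in roots)


sources = {"postgres", "salesforce"}
print(upstream_sources("tableau.revenue_dashboard", EDGES))
print(is_cross_system("tableau.revenue_dashboard", EDGES, sources))
```

If the ingestion edge from `postgres.orders` is missing, the trace stops at `snowflake.raw_orders` and `is_cross_system` returns `False`, which is the warehouse-boundary gap described above.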

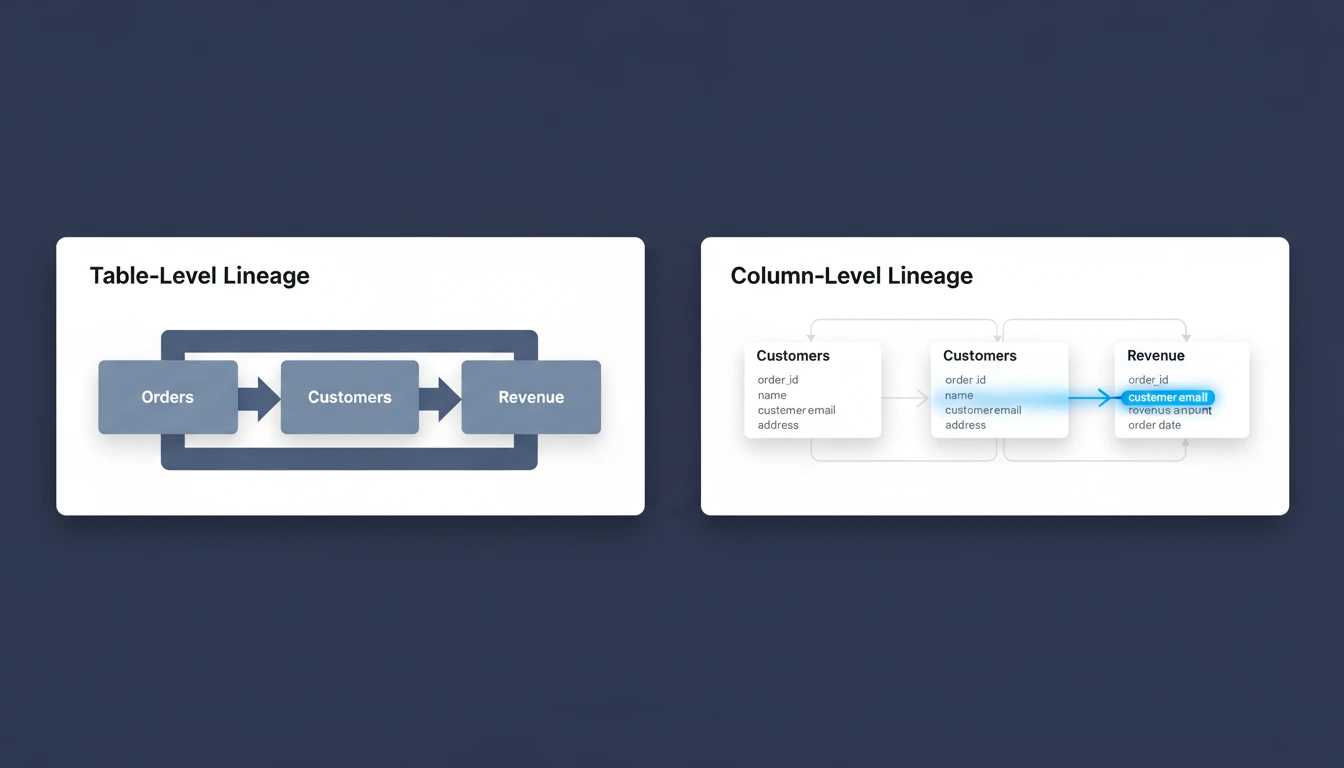

Best practice 2: Prioritize column-level lineage for compliance and AI

Table-level lineage tells you that orders_table feeds revenue_report. Column-level lineage tells you that the net_revenue field in that report is calculated as gross_revenue minus discount_amount, that those inputs come from three upstream tables and that a specific transformation in a dbt model performs the calculation.

That distinction matters in four specific situations:

- GDPR and CCPA compliance: Regulators require that organizations can identify every system where a personal data field exists and trace how it flows. Table-level lineage cannot answer “show me every place customer_email is stored or transformed.” Column-level lineage can.

- SOX financial controls: Audit trails for financial reporting need to show the complete calculation path for key metrics. An auditor asking “how is this revenue figure derived?” requires column-level lineage through every transformation layer.

- AI training data provenance: When a machine learning model is trained on data, the EU AI Act and emerging AI governance frameworks require documentation of where training data came from and how it was processed. This is a column-level question, not a table-level one.

- Root cause analysis at scale: When a data quality issue appears in a downstream metric, column-level lineage reduces the mean time to identify the root cause from hours to minutes by isolating exactly which transformation introduced the anomaly.

Column-level lineage requires more investment to implement and maintain. The practical guidance: implement table-level lineage everywhere first, then prioritize column-level for assets that touch compliance requirements, financial calculations, AI training pipelines and high-visibility business metrics.
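To make the distinction concrete, here is a small sketch of column-level edges and the "show me every place customer_email flows" query. The table and column names are hypothetical (and simplified to two upstream tables for net_revenue), and the in-memory edge list stands in for whatever store a lineage tool actually uses.

```python
# Hypothetical column-level edges: (upstream "table.column", downstream "table.column").
COLUMN_EDGES = [
    ("orders.gross_revenue", "fct_revenue.net_revenue"),
    ("discounts.discount_amount", "fct_revenue.net_revenue"),
    ("fct_revenue.net_revenue", "revenue_report.net_revenue"),
    ("customers.customer_email", "stg_customers.email"),
    ("stg_customers.email", "marketing_export.contact_email"),
]


def downstream_columns(column, edges):
    """Every column derived, directly or indirectly, from `column`."""
    children = {}
    for up, down in edges:
        children.setdefault(up, []).append(down)
    found, stack = set(), [column]
    while stack:
        for child in children.get(stack.pop(), []):
            if child not in found:
                found.add(child)
                stack.append(child)
    return found


def table_level(edges):
    """Collapse column edges to table edges, which loses the field detail."""
    return {(up.split(".")[0], down.split(".")[0]) for up, down in edges}


print(sorted(downstream_columns("customers.customer_email", COLUMN_EDGES)))
print(sorted(table_level(COLUMN_EDGES)))
```

The collapsed table-level view still records that `customers` feeds `stg_customers`, but it can no longer say which field carried the email address, which is why the compliance questions above need the column-level graph.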

Column-Level vs. Table-Level diagram. Image by Atlan.

Best practice 3: Automate lineage capture rather than document it manually

Manual lineage documentation decays faster than teams can maintain it. A schema change in Snowflake, a new dbt model, a modified Airflow DAG: each one creates a gap between documented lineage and actual lineage. Within three months of a manual documentation effort, coverage typically degrades from the initial baseline. Automated lineage capture avoids this decay by continuously rebuilding lineage from the systems themselves.

Three automation approaches, in order of reliability:

- Native integrations: Direct API connections to data platforms (Snowflake, Databricks, BigQuery) that extract lineage metadata from query history, transformation definitions and system catalogs. This is the most accurate method because it reads lineage from the source of truth.

- SQL parsing: Parsing SQL statements in query logs and transformation files to infer lineage relationships. More flexible than native integrations but requires validation because complex SQL (subqueries, CTEs, dynamic SQL) can produce inaccurate results.

- Runtime capture: Intercepting data movement at execution time through pipeline frameworks like Apache Airflow or Apache Spark. Accurate for pipeline lineage but requires instrumentation of each orchestration tool.

The practical standard for a mature lineage practice: native integrations handle warehouse and transformation layer lineage, SQL parsing fills gaps in legacy SQL environments and runtime capture covers orchestration-layer lineage. Manual documentation is reserved only for business context: the “why” behind a transformation, not the “what.”
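The caveat about SQL parsing is easier to see with a deliberately naive example. The sketch below uses regular expressions rather than a real SQL parser. It is an illustration of the failure mode, not a recommended implementation, and the function and pattern names are hypothetical.

```python
import re

# Identifier that follows FROM or JOIN.
TABLE_REF = re.compile(r"\b(?:from|join)\s+([A-Za-z_][\w.]*)", re.IGNORECASE)
# Name introduced by "WITH name AS (" or ", name AS (".
CTE_NAME = re.compile(r"(?:\bwith|,)\s*([A-Za-z_]\w*)\s+as\s*\(", re.IGNORECASE)


def naive_upstream_tables(sql):
    """Tables referenced after FROM/JOIN, minus names defined as CTEs."""
    ctes = {name.lower() for name in CTE_NAME.findall(sql)}
    refs = {name.lower() for name in TABLE_REF.findall(sql)}
    return refs - ctes


GOOD_SQL = """
with recent as (
    select * from raw.orders where order_date > '2024-01-01'
)
select r.order_id, c.email
from recent r
join raw.customers c on c.id = r.customer_id
"""

# EXTRACT(... FROM ...) is a function call, not a table reference.
TRICKY_SQL = "select extract(year from order_date) as y from raw.orders"

print(sorted(naive_upstream_tables(GOOD_SQL)))    # ['raw.customers', 'raw.orders']
print(sorted(naive_upstream_tables(TRICKY_SQL)))  # ['order_date', 'raw.orders']
```

The second result reports a column, `order_date`, as an upstream table. A proper parser avoids this particular mistake, but dynamic SQL and dialect-specific syntax can still mislead it, which is why parsed lineage is worth validating against native metadata where that exists.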

Diagnostic question: How long after a schema change does your lineage reflect that change? If the answer is longer than 24 hours, your lineage automation is incomplete.

Best practice 4: Surface lineage in context to drive adoption beyond data engineers

A lineage graph that lives in a separate tool, accessed only when something breaks, is Stage 1 lineage. Stage 3 lineage is surfaced contextually: in search results when an analyst looks up a dataset, in alert notifications when a schema change affects downstream reports, in governance workflows when a policy applies to data flowing through a specific pipeline.

The adoption failure is common: organizations invest in lineage tooling, build comprehensive coverage and then find that only data engineers use it, and only reactively. The root cause is that lineage is not surfaced where data consumers work.

Four patterns for contextual lineage surfacing:

- In the data catalog: When a data steward or analyst views any table or column, lineage should be immediately visible without a separate navigation step. This turns lineage from a tool into a property of every data asset.

- In impact analysis workflows: When a data engineer proposes a schema change, the lineage graph should automatically surface every downstream asset affected (dashboards, models, pipelines) before the change is made.

- In data quality alerts: When a data quality monitor detects an anomaly, lineage should enable automatic upstream tracing to show which source or transformation introduced the issue.

- In governance decisions: When a data governance officer reviews access requests or policy compliance, lineage should show the data’s flow path to confirm that policy applies to all downstream uses.

Active metadata is the architectural pattern that enables this. Rather than lineage being a static graph updated periodically, active metadata means lineage relationships update continuously and surface in context across the tools data teams use every day.
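The impact analysis pattern can be sketched as a downstream traversal that groups affected assets by owner, so the right people are notified before a change ships. The asset names, owners and registry structure below are hypothetical and only illustrate the shape of the workflow.

```python
from collections import defaultdict, deque

# Hypothetical asset registry: name -> (asset type, owning team).
ASSETS = {
    "dbt.stg_orders": ("model", "data-eng"),
    "dbt.fct_revenue": ("model", "analytics-eng"),
    "tableau.revenue_dashboard": ("dashboard", "finance-bi"),
    "ml.churn_features": ("pipeline", "ml-platform"),
}
EDGES = [
    ("dbt.stg_orders", "dbt.fct_revenue"),
    ("dbt.fct_revenue", "tableau.revenue_dashboard"),
    ("dbt.stg_orders", "ml.churn_features"),
]


def impact_report(changed_asset, edges, assets):
    """Group every downstream asset of `changed_asset` by owner."""
    children = defaultdict(list)
    for up, down in edges:
        children[up].append(down)
    seen, queue = set(), deque([changed_asset])
    while queue:
        for child in children[queue.popleft()]:
            if child not in seen:
                seen.add(child)
                queue.append(child)
    by_owner = defaultdict(list)
    for name in sorted(seen):
        asset_type, owner = assets.get(name, ("unknown", "unowned"))
        by_owner[owner].append((name, asset_type))
    return dict(by_owner)


for owner, affected in impact_report("dbt.stg_orders", EDGES, ASSETS).items():
    print(owner, affected)
```

In a pull-request workflow, a report like this could be posted as a comment or used to request review from each affected owner. Wiring that up depends on the tools in use and is outside this sketch.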

Best practice 5: Build compliance-grade lineage from the start

Technical lineage and compliance lineage have different requirements. Technical lineage tracks data movement for operational purposes: impact analysis, root cause diagnosis, pipeline debugging. Compliance lineage must be auditable: it needs to show regulators a documented, timestamped record of how specific data elements flowed through specific systems during a specific time period.

Organizations that treat these as the same requirement typically end up rebuilding their lineage implementation when compliance requirements escalate. The best practice is to design for compliance-grade lineage from the start, even if compliance is not yet an immediate requirement.

What compliance-grade lineage requires:

| Requirement | Technical lineage | Compliance-grade lineage |
|---|---|---|
| Granularity | Column level where needed | Column level required |
| Timestamping | Current state | Point-in-time historical snapshots |
| Coverage | Core production assets | All assets that touch regulated data |
| Auditability | Human-readable visualization | Machine-exportable audit trail |
| Policy enforcement | None required | Lineage must reflect active policies |
| BI layer | Optional | Required for regulated reporting |

For organizations subject to BCBS 239, the risk data aggregation principles are widely interpreted as requiring banks to demonstrate complete data lineage for risk data from source to report. For GDPR, maintaining Article 30 records of processing activities is far easier with lineage at the personal data field level. For SOX Sections 302 and 906, executive certification of financial statements creates an implicit requirement for calculation-level lineage.

The practical implication: if your organization operates in financial services, healthcare or any regulated industry, or if you plan to deploy AI systems that touch regulated data, build lineage with compliance requirements in mind from the first implementation.
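The timestamping and auditability requirements in the table above can be illustrated with a small data model: each lineage edge carries a validity interval, so the graph can be reconstructed as of any date and exported in a machine-readable form. This is a sketch under assumptions. The `LineageEdge` fields, the asset names and the JSON layout are hypothetical and do not follow any regulator's prescribed format.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class LineageEdge:
    upstream: str
    downstream: str
    valid_from: datetime
    valid_to: Optional[datetime] = None  # None means the edge is still current


def edges_as_of(edges, moment):
    """Edges that were in effect at `moment` (a point-in-time snapshot)."""
    return [
        e for e in edges
        if e.valid_from <= moment and (e.valid_to is None or moment < e.valid_to)
    ]


def export_audit_trail(edges, moment):
    """Serialize the snapshot to JSON for hand-off to an auditor."""
    rows = [
        {k: (v.isoformat() if isinstance(v, datetime) else v)
         for k, v in asdict(e).items()}
        for e in edges_as_of(edges, moment)
    ]
    return json.dumps({"as_of": moment.isoformat(), "edges": rows}, indent=2)


def utc(year, month, day):
    return datetime(year, month, day, tzinfo=timezone.utc)


# The email field was re-sourced from the CRM to a CDP on 2024-03-01.
HISTORY = [
    LineageEdge("crm.customers.email", "dw.dim_customer.email", utc(2023, 1, 1), utc(2024, 3, 1)),
    LineageEdge("cdp.profiles.email", "dw.dim_customer.email", utc(2024, 3, 1)),
]

print(export_audit_trail(HISTORY, utc(2023, 6, 1)))
```

A current-state graph would show only the CDP edge. Keeping closed intervals rather than overwriting edges is what lets the 2023 question be answered after the 2024 migration.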

Best practice 6: Prepare lineage for the AI era

Data lineage is no longer only a governance and operations tool. It has become infrastructure for responsible AI deployment, and the requirements are different from traditional lineage use cases.

When an AI model makes a decision, three questions arise that lineage must be able to answer:

- What data was this model trained on? Training data provenance requires column-level lineage tracing from training datasets back to source systems, including all preprocessing and transformation steps.

- What data is this model running on? Inference data lineage must track the same path for production data, confirming that production data meets the same quality and governance standards as training data.

- When data changes upstream, which models are affected? Automated impact analysis for AI systems requires lineage that connects source data changes to the models that depend on that data.

The EU AI Act, which entered into force in August 2024, creates explicit documentation requirements for high-risk AI systems that map directly to lineage capabilities. Article 11 requires technical documentation, and Annex IV, which sets out what that documentation must contain, calls for a description of the training methodologies and the training data sets used, including their provenance. Column-level lineage from source systems through preprocessing pipelines to training datasets is a practical mechanism for producing this evidence.

Beyond regulatory compliance, AI agents operating within enterprise data environments need lineage to be machine-readable, not just human-navigable. An AI system deciding whether to use a dataset needs to query that dataset’s lineage programmatically to confirm it meets quality and governance criteria. Static lineage visualizations designed for human inspection cannot serve this use case.

The practical implication: lineage infrastructure built for traditional governance use cases needs to be extended with API access, machine-readable formats and integration with AI governance workflows before it can serve as AI governance infrastructure.
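As an illustration of what "machine-readable" means here, the sketch below shows a check an agent or training pipeline might run before using a dataset: walk the upstream lineage and collect governance violations. The `DatasetLineage` structure and its `certified` and `contains_pii` flags are hypothetical stand-ins for metadata a lineage API would return.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetLineage:
    name: str
    upstream: list = field(default_factory=list)  # upstream DatasetLineage nodes
    certified: bool = False
    contains_pii: bool = False


def governance_violations(dataset, allow_pii=False):
    """Walk the upstream graph and list reasons the dataset should not be used."""
    problems, seen, stack = [], set(), [dataset]
    while stack:
        node = stack.pop()
        if node.name in seen:
            continue
        seen.add(node.name)
        if not node.certified:
            problems.append(f"{node.name}: not certified")
        if node.contains_pii and not allow_pii:
            problems.append(f"{node.name}: contains PII")
        stack.extend(node.upstream)
    return sorted(problems)


raw_events = DatasetLineage("raw.events", certified=True, contains_pii=True)
features = DatasetLineage("ml.features", upstream=[raw_events], certified=True)

print(governance_violations(features))                  # ['raw.events: contains PII']
print(governance_violations(features, allow_pii=True))  # []
```

The dataset itself looks clean. The violation only appears because the check follows lineage upstream, which a static diagram cannot do on an agent's behalf.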

How does Atlan approach data lineage?

Most organizations that implement data lineage reach Stage 2 (passive documentation) and stall. The lineage exists, coverage is reasonable, but adoption outside the data engineering team is low. The lineage graph is consulted when something breaks, not as part of daily work.

The root cause is architectural. When lineage lives in a standalone tool that requires separate navigation and manual investigation, it never becomes part of how data teams work. It remains infrastructure, not intelligence.

Atlan’s approach to data lineage is built on the active metadata model: lineage relationships are continuously rebuilt from native integrations with your data stack and surfaced contextually wherever your team works. When an analyst views a dataset in the catalog, lineage is immediately visible. When a schema change is detected, impact analysis runs automatically and alerts the owners of affected downstream assets. When a governance policy applies to a data element, lineage propagates that policy context to all downstream uses of that element.

For cross-system coverage, Atlan connects 100+ data sources including warehouses, transformation layers, orchestration tools and BI platforms (Tableau, Looker and Power BI included) so lineage does not stop at tool boundaries. Column-level lineage is available across the full stack, enabling the compliance audit trails and AI governance documentation that table-level lineage cannot produce.

Most organizations see value from Atlan’s automated lineage within their first month, even with partial coverage, and then progressively advance from passive documentation to active, workflow-embedded lineage as they connect their existing stack and activate impact analysis workflows.


What are FAQs about data lineage best practices?

What is the difference between table-level and column-level data lineage?

Table-level lineage tracks which tables feed which other tables through pipelines and transformations. Column-level lineage tracks which specific fields contribute to which other fields, including the transformation logic applied. Column-level lineage is required for GDPR field-level compliance, SOX financial calculation audits, AI training data provenance documentation and precise root cause analysis. Table-level lineage is sufficient for basic impact analysis and pipeline documentation.

How do you measure the completeness of your data lineage?

Lineage completeness is measured as the percentage of production data assets with documented lineage relationships. Organizations at Stage 2 maturity typically cover 20-50% of assets. Stage 4 maturity requires 90%+ coverage. Key metrics include: percentage of tables with upstream lineage, percentage of business-critical assets with column-level lineage, time-to-update when schema changes occur and percentage of BI reports with end-to-end source-to-report lineage.
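The percentage metrics listed above are straightforward to compute once each asset carries a few flags. The following is a rough sketch: the `Asset` fields are hypothetical, and `has_lineage` on a BI report is assumed to mean that lineage reaches all the way back to a source system.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    kind: str                        # e.g. "table" or "bi_report"
    has_lineage: bool                # documented upstream lineage exists
    business_critical: bool = False
    has_column_lineage: bool = False


def pct(part, whole):
    """Percentage rounded to one decimal; 0.0 when the denominator is empty."""
    return round(100 * part / whole, 1) if whole else 0.0


def coverage_metrics(assets):
    tables = [a for a in assets if a.kind == "table"]
    critical = [a for a in assets if a.business_critical]
    reports = [a for a in assets if a.kind == "bi_report"]
    return {
        "overall": pct(sum(a.has_lineage for a in assets), len(assets)),
        "tables_with_upstream": pct(sum(a.has_lineage for a in tables), len(tables)),
        "critical_with_column_lineage": pct(
            sum(a.has_column_lineage for a in critical), len(critical)
        ),
        "reports_with_lineage": pct(sum(a.has_lineage for a in reports), len(reports)),
    }


INVENTORY = [
    Asset("orders", "table", True, business_critical=True, has_column_lineage=True),
    Asset("customers", "table", True, business_critical=True),
    Asset("tmp_backfill", "table", False),
    Asset("revenue_dashboard", "bi_report", True),
]

print(coverage_metrics(INVENTORY))
```

Tracking the four numbers separately matters because a high overall figure can hide a low share of business-critical assets with column-level lineage.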

What tools are used for data lineage tracking?

Data lineage tools fall into three categories: open-source lineage standards and their reference implementations (OpenLineage, Marquez), data catalog platforms with built-in lineage (Atlan, Collibra, Alation) and platform-native lineage within cloud warehouses (Snowflake Access History, Databricks Unity Catalog, Google Dataplex). Data catalog platforms with native lineage integrations typically provide better cross-system coverage because they connect lineage to broader governance workflows rather than treating it as an isolated capability.

How does data lineage support GDPR compliance?

GDPR compliance requires organizations to maintain records of processing activities under Article 30, respond to data subject access requests and demonstrate that personal data is handled according to stated purposes. Data lineage supports all three by documenting exactly which personal data fields exist in which systems, how they flow through transformations and pipelines and which downstream reports or systems consume them. Column-level lineage is required because GDPR obligations apply at the field level, not the table level.

What is the most common reason data lineage implementations fail?

The most common failure is achieving technical coverage without driving adoption. Teams invest in building lineage documentation but do not surface it in the workflows where data consumers work. The lineage exists but is consulted only reactively, when something breaks. The solution is not more coverage but contextual surfacing: lineage visible in the data catalog, impact analysis alerts triggered automatically and governance workflows that use lineage as a decision input, not just a reference artifact.

How often should data lineage be updated?

Best-practice automated lineage updates continuously as schema changes, new transformations and pipeline modifications occur. The target update latency is under 24 hours for production assets, with real-time or near-real-time updates for high-priority assets that feed compliance reporting or business-critical dashboards. Manual lineage documentation, by contrast, typically becomes stale within weeks because it cannot keep pace with the rate of change in modern data stacks.
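The 24-hour target can be monitored by comparing when each asset's schema last changed with when its lineage was last refreshed. The sketch below assumes both timestamps are available per asset. The dictionaries and asset names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

MAX_LAG = timedelta(hours=24)  # target from the text; tighten for high-priority assets


def stale_assets(schema_changed_at, lineage_refreshed_at, now, max_lag=MAX_LAG):
    """Assets whose latest schema change was not reflected within `max_lag`."""
    stale = {}
    for asset, changed in schema_changed_at.items():
        refreshed = lineage_refreshed_at.get(asset)
        if refreshed is not None and refreshed >= changed:
            lag = refreshed - changed  # lineage caught up; measure how long it took
        else:
            lag = now - changed        # still waiting for a refresh
        if lag > max_lag:
            stale[asset] = lag
    return stale


def ts(day, hour):
    return datetime(2024, 6, day, hour, tzinfo=timezone.utc)


NOW = ts(3, 12)
CHANGED = {"dw.orders": ts(1, 12), "dw.customers": ts(3, 6), "dw.payments": ts(1, 0)}
REFRESHED = {"dw.orders": ts(1, 14), "dw.payments": ts(2, 12)}

print(stale_assets(CHANGED, REFRESHED, NOW))
```

Here `dw.payments` took 36 hours to catch up and is flagged, while `dw.customers` has not been refreshed yet but is still inside the 24-hour window.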

What is the difference between data lineage and data provenance?

Data lineage tracks the movement and transformation of data across systems: the path data takes from source to consumption. Data provenance focuses specifically on the origin and custody history of data: who created it, when and under what conditions. Lineage answers “where has this data been and how was it transformed?” Provenance answers “where did this data come from and who is responsible for it?” In practice, a complete data governance implementation requires both, with lineage providing the technical path and provenance providing the ownership and origin context.

What are key takeaways for data lineage best practices?

Data lineage best practices are not a fixed checklist: the right practice depends on where your organization sits in the maturity model. Most teams stall at Stage 2, passive documentation with low adoption, not because their lineage coverage is insufficient but because their lineage is not surfaced in the workflows where it would be used. Moving to Stage 3 and Stage 4 requires automated capture that stays current, cross-system coverage that connects source systems to BI tools and contextual surfacing that makes lineage part of daily data work rather than a tool consulted only when something breaks.

As AI governance requirements mature, particularly under the EU AI Act, lineage infrastructure that was built for human-readable compliance documentation will need to extend to machine-readable, API-accessible formats that AI systems can query directly. Teams that build with this requirement in mind now will avoid a rebuild later.



Atlan is the next-generation platform for data and AI governance. It is a control plane that stitches together a business's disparate data infrastructure, cataloging and enriching data with business context and security.

Data lineage best practices: Related reads

- How to Document Data Lineage for Regulatory Audits: Practical steps and templates

- Data Lineage 101: Importance, Use Cases, and Their Role in Governance

- Column-Level Lineage Explained: Why it matters for compliance and AI

- Automated Data Lineage: Making Lineage Work For Everyone

- Data Lineage Tracking | Why It Matters, How It Works & Best Practices for 2026

- Open Source Data Lineage Tools: 5 Popular to Consider

- Data Catalog vs. Data Lineage: Differences, Use Cases, and Evolution