Open Source Data Catalog Tools: Top 5 Software In 2026

You have read the docs, spun up a Docker Compose stack, and maybe sat through a demo or two. And you’re still not sure which open-source data catalog actually fits your team.

The hard part isn’t finding options; it’s comparing them honestly. Data catalog features look similar. Tools claim broad connector support. But the differences that matter are:

- How much infrastructure each one needs

- What breaks during upgrades

- How much engineering time you are signing up for

Answers to these are buried in GitHub issues, not landing pages. This guide does that comparison for you. Here are the top 5 tools along with their key strengths and limitations:

| Tool | Best for | GitHub stars | Key strength | Main limitation |
|---|---|---|---|---|
| DataHub (LinkedIn) | Federated architecture, large enterprises | 11.6k | Flexible metadata schema, column-level lineage, connector ecosystem | Infrastructure complexity (Kafka, Elasticsearch, multiple services) |
| OpenMetadata | Modern cloud-native stacks | 8.7k | Integrated discovery, quality, lineage, governance with 84+ connectors | Younger project; some enterprise features are still maturing |
| Apache Atlas | Hadoop ecosystems | 2.1k | Mature governance, taxonomy, tag propagation, fine-grained access | Complex deployment; UI not modern |
| Marquez | Lineage and job/dataset dependency tracking | 2.1k | Real-time lineage via OpenLineage, pipeline traceability | Less focus on discovery or policy enforcement |
| OpenDataDiscovery (ODD) | ML and data science workflows | 1.4k | Federated search, metadata observability, ML tool integrations | Smaller community; features still under development |

GitHub star counts are taken from each project’s GitHub repository, as of February 2026.

Which open source data catalogs lead in 2026?

In 2026, DataHub and OpenMetadata clearly lead the open-source data catalog space due to large communities and fast release cycles. DataHub has 11k+ GitHub stars, while OpenMetadata has 8k+. Behind them, Apache Atlas and Marquez serve stable niche use cases, and OpenDataDiscovery targets ML workflows with a smaller community.

Each targets a different architecture and team profile.

| Tool | Stars | Latest stable release | Contributors | Activity level |
|---|---|---|---|---|
| DataHub | 11,600 | v1.4.0.2 | 706 | Very high |
| OpenMetadata | 8,700 | v1.11.8 | 408 | Very high |
| Apache Atlas | 2,100 | v2.4.0 | 143 | Moderate |
| Marquez | 2,100 | v0.50.0 | 106 | Moderate |
| ODD Platform | 1,400 | v0.27.8 | 36 | Low to moderate |

What are the best open source data catalogs? A deep dive

The best open-source data catalogs depend on your use case. DataHub fits large teams needing modular architecture, lineage, and governance, but requires heavy infrastructure. OpenMetadata offers an easier, all-in-one platform with quality and collaboration features. Apache Atlas works best inside Hadoop ecosystems. Marquez focuses purely on lineage tracking, not discovery. OpenDataDiscovery targets ML workflows and federated metadata.

Choose based on scale, stack complexity, and governance maturity rather than popularity alone.

1. DataHub: Best for organizations that need modular metadata architecture, lineage, and federated governance.

DataHub evolved from LinkedIn’s internal tool, WhereHows. It celebrated its five-year anniversary with the launch of DataHub 1.0 in January 2025. The v1.4.0 release introduced Context Documents to bring organizational knowledge into the catalog, a redesigned lineage navigator, new summary tabs for domains and glossary terms, and connectors for Google Dataplex, Azure Data Factory, and IBM Db2.

The MCP Server and AI Context Kit now let teams feed organizational knowledge to AI agents that can query DataHub metadata directly.

It’s an ideal choice for you if you have a dedicated platform engineering team of two or more engineers. Ensure they’re comfortable managing Kafka, Elasticsearch, and Kubernetes. If you lack this capacity, you might spend weeks on initial setup before ingesting your first metadata.

Below are some of its features that make it a popular choice among open source data catalog software.

- Modular, service-oriented architecture supporting both push and pull metadata ingestion

- Interactive, column-level lineage graph with impact analysis

- Role-based access control for metadata

- Support for data contracts

- Wide connector ecosystem including Snowflake, BigQuery, dbt, Airflow, Databricks, and more.

- MCP Server and AI Context Documents for bringing organizational knowledge into the catalog (v1.4.0)

Key limitations:

- Infrastructure complexity: requires Kafka, Elasticsearch/OpenSearch, and multiple services

- Some integrations need custom engineering, making deployments resource-intensive

- Data quality and observability features continue evolving in the open source edition

Latest release: v1.4.0.2, February 2026. (GitHub)

DataHub’s architecture runs several interconnected services. Before deploying, your team should plan for the following stack:

Infrastructure prerequisites

| Component | Purpose | Notes |
|---|---|---|
| Apache Kafka | Real-time metadata event streaming | Core dependency; handles MetadataChangeProposal events |
| Elasticsearch or OpenSearch | Search indexing | OpenSearch 2.19.3+ required for semantic search (v1.4.0) |
| MySQL or PostgreSQL | Primary metadata store | Stores aspect data and system metadata |
| DataHub GMS | Metadata service backend | Central API layer |
| DataHub Frontend | React-based web UI | Separate container |
| Schema Registry | Schema management for Kafka | Required for metadata serialization |

Most production deployments use Kubernetes with Helm charts. Docker Compose works well for evaluation, but scaling beyond a proof of concept typically requires Kubernetes expertise.

What users like:

- DataHub’s redesigned lineage navigator in v1.4.0 received a positive reception from the community on its GitHub release page. The new summary tabs for domains, glossary terms, and data products give users a quick overview of key entity details.

- The project’s monthly town hall meetings and public roadmap also build community trust, with the 2025 roadmap openly detailing planned features for discovery, observability, and governance.

What needs improvement:

- Operational complexity is the most frequently reported pain point on GitHub. One user reported that DataHub v1.2.0 was three to four times slower than v1.1.0 when hard-deleting aspects and restoring indices, with Elasticsearch entering a loop during the process.

- A September 2025 issue describes the reindex job getting stuck in a loop during version upgrades, repeatedly logging document count mismatches.

2. OpenMetadata: Best for teams seeking a unified metadata platform covering governance, lineage, and collaboration

OpenMetadata takes a different approach from DataHub. Instead of a modular architecture that teams assemble, it ships a unified platform that covers discovery, governance, quality, profiling, lineage, and collaboration in one package.

Built by the team behind Uber’s data infrastructure, along with the founders of Apache Hadoop and Atlas, OpenMetadata launched with a clear thesis: metadata management shouldn’t require five different tools stitched together.

OpenMetadata suits teams with one to two platform engineers who want a broad feature set without managing a complex infrastructure stack. The lower operational overhead makes it a practical choice for mid-size organizations or startups building their first metadata layer.

Below are some of its features that make it a popular choice among open source data catalog software.

- Built-in data quality tests and data profiling

- Data Contracts for producer-consumer collaboration

- MCP integration for AI agents

- Collaboration features, including comments, domain glossaries, tasks, and announcements

- Simplified architecture (MySQL/PostgreSQL + Elasticsearch) compared to DataHub’s multi-component stack

Key limitations:

- Enterprise RBAC and SSO are still maturing

- Some connectors need customization for edge cases

- Performance at very large enterprise scale requires tuning

Latest release: v1.11.8, February 2026 (GitHub)

Infrastructure prerequisites

OpenMetadata runs a leaner stack compared to DataHub:

| Component | Purpose | Notes |
|---|---|---|
| MySQL or PostgreSQL | Metadata store | Primary backend; no graph database required |
| Elasticsearch | Search and indexing | Standard deployment |
| OpenMetadata Server | Java-based API and UI server | Single application handles both backend and frontend |
| Airflow (optional) | Ingestion workflow orchestration | Can use the built-in scheduler or external Airflow |

The absence of Kafka and a graph database simplifies deployment. You can get a working instance running in a single afternoon for evaluation.

What users like:

The weekly patch release cadence consistently builds confidence in the community. OpenMetadata shipped v1.11.8 in February 2026, with prior patches landing weekly throughout late 2025.

What needs improvement:

- Advanced RBAC remains a gap for larger enterprises. A June 2025 feature request asks for customizable ownership roles, granular per-property edit permissions, and federated role management.

- Stability under high concurrency has also surfaced as a concern. An August 2025 issue reports that the Airflow Lineage Backend hangs indefinitely when the OpenMetadata server becomes unresponsive under load.

3. Apache Atlas: Best for organizations heavily invested in Hadoop ecosystems needing strong metadata governance

Apache Atlas holds a special place among open source catalogs. Many companies still use it as a foundation for governance platforms, including Atlan, an enterprise-grade active metadata platform built on a hardened fork of Apache Atlas as its metadata backend.

Originally developed by Hortonworks and donated to Apache, Atlas was one of the first open source tools for metadata governance in Hadoop ecosystems. It’s powered by JanusGraph, Apache Solr, Apache Kafka, and integrates deeply with Apache Ranger for fine-grained access control. The latest release, v2.4.0, shipped in January 2025.

It’s a strong fit for organizations that operate in the Hadoop ecosystem and have teams familiar with HBase, Solr, and Kafka administration. If your stack is Snowflake, BigQuery, or Databricks, Atlas will require significant custom extension work.

Below are some of its features that make it a popular choice among open source data catalog software.

- Rich support for taxonomy, classifications, and tag propagation

- Fine-grained metadata access controls and audit tracking

- Deep integrations with the Hadoop stack (Hive, Kafka, HBase)

- Powered by JanusGraph, Apache Solr, Apache Kafka, and Apache Ranger

- Well-documented via the Apache Software Foundation’s Jira project

Key limitations:

- Less focus on discovery workflows for end users

- Heavy and complex to deploy and maintain

- UI feels dated compared to modern alternatives

- Adapting to modern cloud or lakehouse architectures requires custom extensions

- Complex setup for non-Hadoop environments

Latest release: v2.4.0, January 2025 (GitHub)

Infrastructure prerequisites

The infrastructure footprint is the heaviest among all tools on this list. Production deployment typically requires a Hadoop cluster or equivalent infrastructure.

| Component | Purpose | Notes |
|---|---|---|
| Apache Kafka | Event notifications and hooks | Required for real-time metadata capture |
| Apache Solr | Search indexing | Full-text search across entities |
| JanusGraph | Graph metadata store | Stores entity relationships and lineage |
| Apache HBase (or BerkeleyDB) | JanusGraph storage backend | HBase for production; BerkeleyDB for evaluation |
| Apache Ranger (optional) | Policy enforcement | Integrates with Atlas for tag-based access control |

What users like:

Atlas’s depth of governance remains unmatched for Hadoop environments. Tag propagation and classification inheritance allow governance rules to flow automatically through lineage paths.
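To make the idea of tag propagation concrete, here is a minimal Python sketch of how a classification applied to one dataset could flow to everything downstream of it. It is a conceptual illustration, not Atlas’s implementation: the `propagate_tags` function, the dictionary-based lineage graph, and the dataset names are all hypothetical.

```python
from collections import deque


def propagate_tags(lineage, direct_tags):
    """Return effective tags for each tagged dataset and everything downstream of it.

    lineage: dict mapping an upstream dataset to a list of its downstream datasets.
    direct_tags: dict mapping a dataset to the tags applied to it directly.
    """
    effective = {name: set(tags) for name, tags in direct_tags.items()}
    queue = deque(direct_tags)
    while queue:
        upstream = queue.popleft()
        for downstream in lineage.get(upstream, []):
            inherited = effective.setdefault(downstream, set())
            new_tags = effective[upstream] - inherited
            if new_tags:
                # Revisit a downstream node only when it gained a tag,
                # so cycles in the graph cannot loop forever.
                inherited |= new_tags
                queue.append(downstream)
    return effective


# Hypothetical dataset names, for illustration only.
lineage = {
    "raw.customers": ["staging.customers"],
    "staging.customers": ["marts.customer_360", "marts.churn_features"],
}
direct_tags = {"raw.customers": {"PII"}}

effective_tags = propagate_tags(lineage, direct_tags)
for dataset in sorted(effective_tags):
    print(dataset, sorted(effective_tags[dataset]))
```

Tagging `raw.customers` once is enough for both downstream marts to inherit the `PII` tag, which is the behavior that lets access policies follow the data instead of being reapplied table by table.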

What needs improvement:

- Deployment complexity is a persistent concern. A blog post documenting the build process for Atlas v2.3.0 describes the author “fumbling” through installation due to a lack of documentation for recent releases. These challenges might persist in v2.4.0 for teams outside the Hadoop ecosystem.

- A security vulnerability (CVE-2024-46910) disclosed in February 2025 exposed XSS issues in Atlas versions 2.3.0 and earlier, requiring an upgrade to v2.4.0 to remediate. This highlights the importance of staying current, which is more difficult given Atlas’s slower release cadence.

4. Marquez: Best for teams focused on lineage, provenance, and job/dataset dependency tracking

If your primary question is “how does data move through our pipelines, and what breaks when something changes?” Marquez answers it with less infrastructure than other tools on this list.

Created by WeWork and now a graduated project under the LF AI & Data Foundation, Marquez also pioneered OpenLineage, the open standard for data lineage metadata collection. As the reference implementation of that standard, Marquez works out of the box with every OpenLineage integration: Apache Airflow, Apache Spark, Apache Flink, dbt, and Dagster.

Marquez fits data engineering teams that already use Airflow, Spark, or dbt and need real-time lineage without investing in a full catalog platform.

When to pair Marquez with another tool

Marquez excels at lineage but does not replace a data catalog. Teams that need discovery, search, business glossaries, governance, or data quality monitoring should pair Marquez with DataHub, OpenMetadata, or a commercial platform.

The OpenLineage standard makes this integration straightforward since both DataHub and OpenMetadata can consume OpenLineage events.
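For readers who have not seen one, the sketch below builds a minimal OpenLineage run event as a Python dictionary. The top-level field names (`eventType`, `eventTime`, `run`, `job`, `inputs`, `outputs`, `producer`, `schemaURL`) follow the OpenLineage spec; the job, namespace, dataset names, producer URL, and the spec version in `schemaURL` are illustrative assumptions, so check them against the spec version you target. The block only builds and prints the event. It does not send it anywhere.

```python
import json
import uuid
from datetime import datetime, timezone


def build_run_event(event_type, job_namespace, job_name, run_id, inputs, outputs):
    """Build a minimal OpenLineage-style run event as a plain dict.

    inputs and outputs are lists of (namespace, name) tuples.
    """
    return {
        "eventType": event_type,  # e.g. "START", "COMPLETE", "FAIL"
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": run_id},
        "job": {"namespace": job_namespace, "name": job_name},
        "inputs": [{"namespace": ns, "name": name} for ns, name in inputs],
        "outputs": [{"namespace": ns, "name": name} for ns, name in outputs],
        # Hypothetical producer; identifies the code that emitted the event.
        "producer": "https://example.com/hypothetical-pipeline",
        # Illustrative spec version; copy the URL from the spec you target.
        "schemaURL": "https://openlineage.io/spec/1-0-5/OpenLineage.json#/definitions/RunEvent",
    }


# Hypothetical job and dataset names, for illustration only.
event = build_run_event(
    event_type="COMPLETE",
    job_namespace="analytics",
    job_name="daily_orders_rollup",
    run_id=str(uuid.uuid4()),
    inputs=[("warehouse", "raw.orders")],
    outputs=[("warehouse", "marts.daily_orders")],
)
print(json.dumps(event, indent=2))
```

Because the payload is plain JSON over HTTP, the same event can be pointed at any backend that accepts OpenLineage events, which is what keeps a Marquez-first setup portable if you add a catalog later.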

Below are some standout features of Marquez.

- OpenLineage-compliant metadata server for real-time lineage collection and visualization

- Unified metadata UI showing job-to-dataset relationships and execution lineage

- Flexible lineage API for automating impact analysis, backfills, and root-cause tracing

- Integration with modern data stack tools like dbt and Apache Airflow

- New Data Observability dashboard and GraphQL endpoint (beta) in v0.50.0

Key limitations:

- Less emphasis on discovery or governance beyond lineage

- Not feature-rich in policy enforcement, access control, or business metadata

- Smaller community and ecosystem compared to DataHub or OpenMetadata

Latest release: v0.50.0, October 2024 (GitHub)

Infrastructure prerequisites

Marquez runs the leanest stack of any tool on this list:

| Component | Purpose | Notes |
|---|---|---|
| PostgreSQL | Metadata and lineage event store | Only required database |
| Marquez API server | Java-based backend | Handles OpenLineage event ingestion and API queries |
| Marquez Web UI | React-based frontend | Lineage visualization and dataset browsing |

What users like:

The OpenLineage integration is Marquez’s strongest differentiator. As the reference implementation of the OpenLineage standard, Marquez works out of the box with every OpenLineage integration: Apache Airflow, Apache Spark, Apache Flink, dbt, and Dagster.

What needs improvement:

The GitHub issues page shows a slower response time than DataHub or OpenMetadata. As of early 2026, open issues dating back to May 2025 remain unaddressed.

5. OpenDataDiscovery: Best for organizations needing federated search and metadata discovery with a focus on ML workflows.

Most open source catalogs treat ML metadata as an afterthought. Tables and dashboards get first-class support. Models, experiments, and feature stores get bolted on later, if at all. OpenDataDiscovery (ODD) flips that priority.

Developed by Provectus around 2021, ODD was initially designed for ML teams but later expanded to cover data engineering and data science use cases. Its federated architecture uses lightweight collector agents that push metadata to the platform via REST API, avoiding the need for a centralized ingestion orchestrator.

If your organization’s primary concern is understanding how data flows from ingestion through ML model training and into production, ODD addresses that niche.

Below are some standout features of OpenDataDiscovery.

- Federated data catalog enabling search across data silos

- Ingestion-to-product data lineage

- Metadata health and observability dashboards

- End-to-end microservices lineage

- Integration with data quality tools and ML platforms, including dbt, Snowflake, SageMaker, KubeFlow, and BigQuery

- Available on AWS Marketplace

Key limitations:

- Less community maturity and adoption compared to older projects

- Some features (lineage depth, quality) remain under development

- Connectors and integrations may require custom work

Latest release: v0.27.8, February 2025 (GitHub)

Infrastructure prerequisites

ODD’s architecture is straightforward. The collectors are lightweight agents that push metadata to the platform via REST API, avoiding the need for a centralized ingestion orchestrator.

| Component | Purpose | Notes |
|---|---|---|
| PostgreSQL | Metadata store | Only required database |
| ODD Platform | Java-based backend and UI | Single application container |
| ODD Collectors | Metadata ingestion agents | Separate containers per data source type |

What users like:

ML engineers value ODD’s first-class treatment of ML entities. The GitHub repository describes how ODD treats ML entities as “first citizens,” integrating model metadata alongside traditional data assets.

What needs improvement:

The small community is the biggest risk factor. The issues page shows limited activity through 2025, with several-month gaps between bug reports.

The operational reality of open source catalogs

Before committing to any tool, your team should understand the pattern that unfolds after deployment.

Catalog becomes a second product

Three to six months of engineering effort is the standard estimate for deploying an open-source catalog. What that number hides is what happens after deployment.

One pattern repeats across teams: the catalog starts as a project and quietly becomes a product. Not because the tools are bad; they aren’t. But running a distributed infrastructure in production surfaces problems that only your team can solve.

Here’s what that looks like in practice, drawn directly from community-reported issues:

Your upgrade blocks your roadmap

A DataHub user upgrading to v1.2.0 found that hard-deleting aspects and restoring Elasticsearch indices had become three to four times slower than in v1.1.0.

For the team running this instance, it means someone has to pause their sprint work and debug an infrastructure problem they didn’t create.

A routine version bump turns into weeks of investigation

A separate DataHub issue from September 2025 describes the upgrade reindex job getting stuck in a loop even after applying the recommended workaround.

For teams with large indices, every version upgrade now carries the risk of a multi-day debugging cycle. The knowledge to resolve it lives in GitHub threads, not in a support queue.

Your catalog silently breaks your pipelines

An OpenMetadata issue from August 2025 documents a particularly insidious failure. The OpenMetadata server becomes unresponsive under high concurrent load, and the Airflow Lineage Backend hangs indefinitely.

The team that reported this ran extensive load testing, contributed PRs for configurable retries, added custom logging, and worked on Helm chart scaling, which is months of engineering effort just to diagnose and work around a client timeout that didn’t exist. Their conclusion: the lineage backend lacked a basic read timeout, so a connected but unresponsive server would block Airflow tasks indefinitely.
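The failure mode described here, a client that waits forever on a connected but silent server, is easy to reproduce and to guard against. The sketch below is a generic Python illustration using only the standard library, not OpenMetadata’s lineage backend code; the function name `post_with_timeout` and the default of five seconds are hypothetical choices.

```python
import http.client
import json
import urllib.request


def post_with_timeout(url, payload, timeout_s=5.0):
    """POST a JSON payload and return True on a 2xx response.

    timeout_s bounds each blocking socket operation (connecting, and waiting
    for response data), so a server that accepts the connection but never
    answers cannot block the caller indefinitely.
    """
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout_s) as response:
            return 200 <= response.status < 300
    except (OSError, http.client.HTTPException):
        # Covers timeouts, refused connections, and HTTP error responses.
        # The caller can log the failure and let the pipeline task continue.
        return False
```

The design choice is that lineage reporting fails open: a `False` return lets the pipeline task finish without lineage rather than hang because the metadata server is overloaded. Whether dropping an event is acceptable, or whether it should be queued and retried, depends on how much you rely on complete lineage.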

None of this means these tools are bad. But each issue represents a cost. In a managed platform, these are the vendor’s problem. In open source, they’re yours.

The real cost of “free”: A back-of-napkin calculation

Open-source catalogs have no licensing fees. But “free” has a price tag; it’s just distributed across your payroll and infrastructure budget, where it’s harder to see.

Here’s what a typical open-source catalog deployment actually costs in Year 1:

| Cost category | Estimate | Basis |
|---|---|---|
| Engineering time (deployment) | $90,000–$135,000 | 2 engineers × 3–6 months at ~$180K loaded cost, 50% allocation |
| Engineering time (ongoing ops) | $54,000–$108,000/year | 1–2 engineers × 30% time on upgrades, connector fixes, debugging |
| Infrastructure | $24,000–$60,000/year | Kafka, Elasticsearch, Kubernetes clusters, database, and monitoring |
| Opportunity cost | Hard to quantify | Features not built, governance workflows not automated, adoption delayed |
| Year 1 total (excluding opportunity cost) | $168,000–$303,000 | Sum of the three quantified rows above |

These numbers shift based on your cloud provider, team salaries, and deployment complexity. But they’re directionally right for a mid-size organization running DataHub or OpenMetadata in production.

Before choosing open source, run this calculation with your actual salaries and cloud rates. If the total surprises you, that’s the conversation worth having.
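If you want to rerun the estimate with your own figures, a small script is enough. The sketch below is one way to structure it in Python, with hypothetical names (`CostRange`, `ongoing_ops_cost`). It takes the deployment and infrastructure ranges from the table as given inputs, derives the ongoing-ops range from the stated basis (1–2 engineers at 30% of a ~$180K loaded cost), and sums the three.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostRange:
    low: int
    high: int

    def __add__(self, other):
        return CostRange(self.low + other.low, self.high + other.high)

    def __str__(self):
        return f"${self.low:,}-${self.high:,}"


def ongoing_ops_cost(loaded_cost, min_engineers, max_engineers, time_pct):
    """Yearly ops cost: engineers x share of their time x loaded cost per engineer."""
    return CostRange(
        min_engineers * loaded_cost * time_pct // 100,
        max_engineers * loaded_cost * time_pct // 100,
    )


# Replace these with your own salaries and cloud rates.
deployment = CostRange(90_000, 135_000)       # taken from the table as given
ops = ongoing_ops_cost(180_000, 1, 2, 30)     # 1-2 engineers at 30% time
infrastructure = CostRange(24_000, 60_000)    # taken from the table as given

year_one_total = deployment + ops + infrastructure
print("Ongoing ops:", ops)
print("Year 1 total:", year_one_total)
```

Integer arithmetic keeps the totals exact. Opportunity cost is deliberately left out, as in the table, because it has no defensible number.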

How can you pick and deploy an open source data catalog?

Pick and deploy an open-source data catalog in six steps. First, define must-have capabilities: metadata scope, lineage depth, governance, glossary, and connectors. Next, check project health using GitHub activity, documentation, releases, and community support. Run a small pilot in one business domain and involve both engineers and analysts. Then automate ingestion and access policies. Track adoption and ROI through usage metrics. Finally, plan long-term ownership, maintenance effort, and infrastructure cost before scaling.

Let’s dive deeper into the six-step framework:

Step 1: Define must-have capabilities

Start by identifying the non-negotiables for your environment. Consider:

- Metadata scope

- Lineage depth

- Governance controls (RBAC, tags, classification, etc.)

- Business glossary

- Connector coverage

- Scalability and architecture

Step 2: Evaluate open-source health and community

Shortlist catalogs with active communities, solid documentation, and frequent releases.

Score each catalog on:

- GitHub metrics: Stars, forks, contributors, release cadence.

- Community responsiveness: Activity on Slack, GitHub Issues, or mailing lists.

- Documentation and setup guides: Are they maintained and easy to follow?

- License type: Apache 2.0, MIT, or custom — this determines flexibility for enterprise deployment.
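One way to turn this scoring into something comparable across tools is a simple weighted score. The Python sketch below uses the star and contributor counts from the comparison table earlier in this guide; the weights and the `health_scores` function are hypothetical, and the score covers only two of the criteria above. Release cadence, responsiveness, documentation, and license still need a manual look.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProjectHealth:
    name: str
    stars: int
    contributors: int


# Figures from the comparison table in this guide (February 2026).
PROJECTS = [
    ProjectHealth("DataHub", 11_600, 706),
    ProjectHealth("OpenMetadata", 8_700, 408),
    ProjectHealth("Apache Atlas", 2_100, 143),
    ProjectHealth("Marquez", 2_100, 106),
    ProjectHealth("ODD Platform", 1_400, 36),
]

# Hypothetical weights; tune them to what your team values.
WEIGHTS = {"stars": 0.4, "contributors": 0.6}


def health_scores(projects, weights):
    """Score each project from 0 to 1, normalizing each metric by its maximum."""
    max_stars = max(p.stars for p in projects)
    max_contributors = max(p.contributors for p in projects)
    return {
        p.name: round(
            weights["stars"] * p.stars / max_stars
            + weights["contributors"] * p.contributors / max_contributors,
            3,
        )
        for p in projects
    }


scores = health_scores(PROJECTS, WEIGHTS)
ranking = sorted(scores, key=scores.get, reverse=True)
for name in ranking:
    print(f"{name}: {scores[name]}")
```

Treat the output as a shortlisting aid rather than a verdict: a high score says the project is active, not that it fits your stack.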

Step 3: Pilot in a high-impact domain/use case

Pilot your top choice in a sandbox with one high-value domain (e.g., customer, finance, or marketing) and one use case.

Test discovery, search accuracy, lineage visualization, glossary tagging and ownership visibility.

Involve both technical (data engineers) and non‑technical (data stewards, analysts) users early to evaluate usability and adoption potential.

Step 4: Automate ingestion and policy enforcement

Automate metadata ingestion via tools like Airflow, dbt, Prefect, or Dagster.

Implement tag‑driven or role-based access control so governance operates automatically rather than manually.

For advanced setups, connect catalogs to CI/CD pipelines or APIs for version-controlled metadata updates.

Step 5: Measure adoption, reliability, and ROI

Track early adoption: search volume, active users, issue resolution speed. If results are strong, expand gradually. If not, reassess or test your runner-up tool.

Step 6: Plan for sustainability

Open source catalogs often require internal ownership.

Document:

- Time spent on setup, upgrades, and maintenance

- Custom code or connectors built internally

- Cost of infrastructure vs. potential managed alternatives

This documentation builds a baseline for future ROI comparisons if you ever consider transitioning to a managed or commercial metadata platform.

Here’s a supporting matrix to help you choose by use case:

| Use case | Recommended tool(s) | Reason |
|---|---|---|
| Discovery-first | OpenMetadata, DataHub | Strong search UX, broad connector support |
| Governance-heavy | Apache Atlas, DataHub | Tag propagation, RBAC, audit trails |
| Lineage-focused | Marquez, DataHub | OpenLineage compliance, column-level lineage |
| ML workflows | ODD, OpenMetadata | SageMaker/KubeFlow integrations, metadata observability |
| Hadoop ecosystem | Apache Atlas | Native Hive/HBase/Kafka hooks |
| Modern cloud-native | OpenMetadata, DataHub | Snowflake, BigQuery, dbt, Airflow connectors |

What are the trade-offs of choosing an open source data catalog?

An open-source data catalog gives you control, but your team handles the work. You avoid license fees, keep full ownership, customize freely, and benefit from transparent code and active communities. But you must host, upgrade, and maintain it yourself, and long-term infrastructure and maintenance costs can quietly grow.

Here’s how the benefits and trade-offs map against each other, along with what you can do to mitigate the risks:

| Benefit | Trade-off | Mitigation strategy |
|---|---|---|
| No licensing fees | Infrastructure and personnel costs add up | Budget for 1-2 FTE engineers dedicated to catalog operations |
| Full customization | Custom code requires ongoing maintenance | Contribute upstream to reduce fork divergence |
| No vendor lock-in | Migration between tools is still complex | Use OpenLineage and open APIs for portability |
| Community innovation | Support is best-effort, not SLA-backed | Build internal expertise; engage actively in Slack/GitHub |
| Transparency (open code) | Security patches depend on community responsiveness | Monitor CVEs; maintain internal patching capability |
| Self-hosted control | You own uptime, scaling, and disaster recovery | Invest in infrastructure automation (Helm, Terraform) |

According to Gartner, poor data quality costs organizations an average of $12.9 million per year. Whether you choose open source or managed, the cost of doing nothing is higher than either option.

Should you go open source or managed?

Answer these questions to make the right decision:

Q1. Do you have 2+ platform engineers who can dedicate 30%+ of their time to catalog operations?

- Yes: Continue to the next question.

- No: Open source will likely stall after the pilot. A managed platform provides production-grade metadata without the same staffing commitment. Evaluate Atlan or similar managed tools first.

Q2. Does your team have production experience operating Kafka, Elasticsearch, and Kubernetes?

- Yes: DataHub and OpenMetadata are viable. Continue.

- No, but we can learn: Budget 3–4 additional months for the infrastructure learning curve on top of catalog deployment, and factor this into your timeline honestly.

- No, and it’s not our focus: The infrastructure overhead will compete with your core engineering work. Consider a managed platform.

Q3. Is your primary need narrow (lineage only) or broad (discovery + governance + lineage + quality)?

- Lineage only: Marquez. Lightweight, fast to deploy, OpenLineage-native. Pair with a catalog later if needs expand.

- Broad: DataHub or OpenMetadata. But broad needs also mean broad maintenance. If you need all four capabilities in production within 90 days, a managed platform will get you there faster.

Q4. Is your data stack primarily Hadoop-based?

- Yes: Apache Atlas remains the strongest fit. Its governance depth in Hadoop environments is unmatched.

- No: Atlas will require significant custom work. Skip it.

Q5. Is ML metadata (models, experiments, feature stores) a primary concern?

- Yes: Evaluate ODD alongside OpenMetadata. Be prepared for a smaller community and potential custom collector work.

- No: Focus on DataHub or OpenMetadata for general-purpose cataloging.

Q6. Final check: What’s your acceptable time-to-value?

- Under 90 days: Managed platforms (like Atlan) are designed for this timeline. Open-source projects typically require 3–6 months to deliver production-ready value.

- 6+ months is fine: Open source is viable if you passed the staffing and infrastructure checks above.
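The six questions can also be read as a small decision function. The Python sketch below is one possible encoding, not a definitive rule set: the `TeamProfile` fields and the `recommend` function are hypothetical, the lineage-only answer to Q3 is treated as terminal, and the time-to-value check from Q6 is applied before the Hadoop and ML questions so that a tight deadline overrides a tool preference.

```python
from dataclasses import dataclass

MANAGED = "managed platform"


@dataclass(frozen=True)
class TeamProfile:
    platform_engineers: int        # Q1: engineers available for catalog operations
    ops_time_pct: int              # Q1: percent of their time they can dedicate
    infra_experience: str          # Q2: "yes", "can_learn", or "not_our_focus"
    lineage_only: bool             # Q3: True if the need is lineage only
    hadoop_based: bool             # Q4: True if the stack is primarily Hadoop
    ml_metadata_primary: bool      # Q5: True if ML metadata is a primary concern
    acceptable_days_to_value: int  # Q6: how long you can wait for production value


def recommend(profile):
    """Return a starting recommendation based on the six questions."""
    # Q1: without enough staffing, open source tends to stall after the pilot.
    if profile.platform_engineers < 2 or profile.ops_time_pct < 30:
        return MANAGED
    # Q2: "can_learn" passes, but budget 3-4 extra months for the learning curve.
    if profile.infra_experience == "not_our_focus":
        return MANAGED
    # Q3: a narrow lineage-only need points to Marquez.
    if profile.lineage_only:
        return "Marquez"
    # Q6: broad needs within 90 days favor a managed platform.
    if profile.acceptable_days_to_value < 90:
        return MANAGED
    # Q4: Hadoop-based stacks fit Apache Atlas.
    if profile.hadoop_based:
        return "Apache Atlas"
    # Q5: ML metadata as a primary concern.
    if profile.ml_metadata_primary:
        return "ODD alongside OpenMetadata"
    return "DataHub or OpenMetadata"


# Hypothetical team: 3 engineers at 40% time, willing to learn the stack.
print(recommend(TeamProfile(3, 40, "can_learn", False, False, False, 240)))
```

Writing the flow down this way makes the ordering assumptions explicit, which is useful when stakeholders disagree about which question should win.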

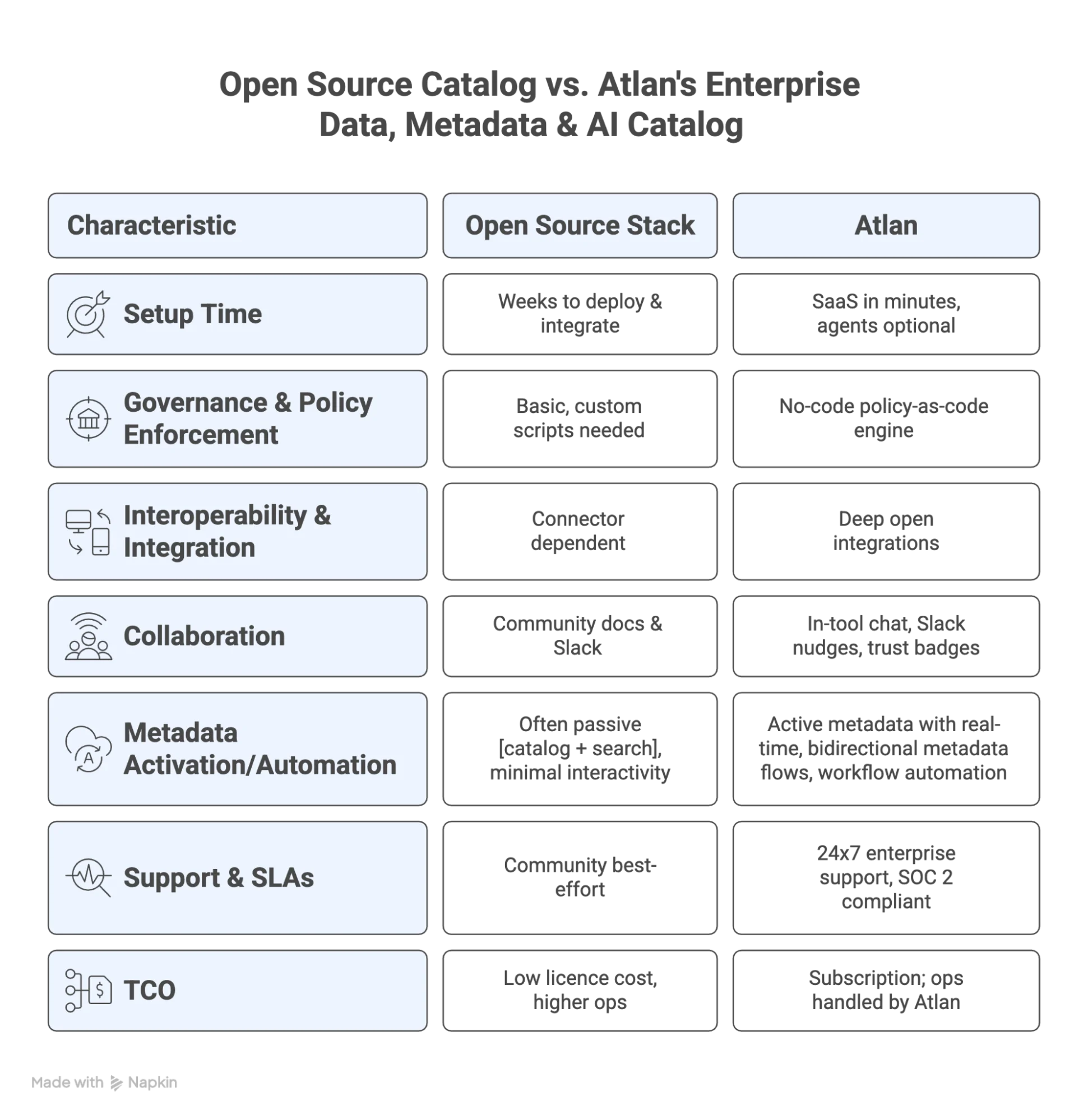

Open source vs. Atlan: A side-by-side snapshot

Open source catalogs work well for specific tasks like discovery, lineage, and search. They offer control and flexibility, but you usually have to build governance, integrations, and user workflows yourself. Atlan provides a unified control plane across data and AI tools, reducing integration effort so your team can focus on insights rather than managing systems.

| Dimension | Open source catalogs | Atlan |
|---|---|---|
| Deployment | Self-hosted; you manage infrastructure | Managed SaaS; Atlan handles operations |
| Connectors | Varies by tool | 100+ pre-built connectors |
| Governance | Built piecemeal across tools | Unified governance with automated policies |
| Lineage | Column-level available in DataHub, OpenMetadata, and Marquez | Column-level lineage across data and AI assets |
| Collaboration | Varies; some offer comments and glossaries | Embedded collaboration with Slack, Jira, and email integrations |
| AI governance | Limited or emerging | Purpose-built AI governance for model lineage and compliance |
| Time to value | Months of engineering effort | Weeks with pre-built workflows |
| Support | Community-driven (Slack, GitHub) | Enterprise SLA with dedicated support |

Companies like Nasdaq, Autodesk, General Motors, and Fox use Atlan to govern their data and AI programs at scale.

Open source vs. Atlan: Side-by-side snapshot in 2026. Source: Atlan.

FAQs about open source data catalog tools

1. What is an open source data catalog?

An open source data catalog is a free, community-maintained tool that helps organizations discover, document, and manage metadata across their data ecosystem. These tools provide searchable metadata, lineage tracking, business glossaries, and extensibility through APIs. You self-host them for full control over customization and security.

2. How can an open source data catalog improve data discovery?

Open source catalogs centralize metadata from disparate systems into a single searchable interface. This reduces the time data teams spend finding and understanding assets.

3. What are the benefits of using an open source data catalog for data governance?

Open source catalogs enable classification, tagging, ownership assignment, and policy enforcement without licensing costs. Tools like Apache Atlas and DataHub offer fine-grained access controls and audit trails.

4. How do I choose the right open source data catalog for my organization?

Match the tool to your stack and team capabilities. If you run Hadoop, Atlas is the best fit. In modern cloud-native environments, DataHub or OpenMetadata is a stronger choice. Prioritize connector coverage, lineage depth, and governance controls. Run a 30-day pilot in one business domain before committing.

5. What features should I look for in an open source data catalog?

Focus on metadata coverage across your existing tools, column-level lineage, governance and access control features, search and discovery UX, API extensibility, and community health.
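
One way to make that checklist comparable across tools is a weighted score. The sketch below is only an illustration: the criteria come from the answer above, but the weights, the 1 to 5 rating scale, and the two unnamed candidates are placeholders, not measured results for any real catalog.

```python
# Criteria come from the answer above; the weights are placeholders to tune.
WEIGHTS = {
    "metadata_coverage": 0.25,
    "column_level_lineage": 0.20,
    "governance_access_control": 0.20,
    "search_discovery_ux": 0.15,
    "api_extensibility": 0.10,
    "community_health": 0.10,
}


def weighted_score(ratings: dict[str, int]) -> float:
    """Combine 1-5 ratings into one weighted score on the same 1-5 scale."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


# Made-up pilot ratings for two unnamed candidates, not real tool scores.
pilot_ratings = {
    "candidate_a": {
        "metadata_coverage": 4, "column_level_lineage": 5,
        "governance_access_control": 3, "search_discovery_ux": 4,
        "api_extensibility": 4, "community_health": 5,
    },
    "candidate_b": {
        "metadata_coverage": 5, "column_level_lineage": 3,
        "governance_access_control": 4, "search_discovery_ux": 4,
        "api_extensibility": 3, "community_health": 3,
    },
}

# Rank candidates from highest to lowest weighted score.
ranking = sorted(pilot_ratings, key=lambda name: weighted_score(pilot_ratings[name]), reverse=True)
```

Agree on the weights before the pilot starts, so the ratings you collect cannot be bent toward a favorite afterward.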

6. What is the difference between open source and commercial data catalogs?

Open-source catalogs offer customization and no licensing fees but require infrastructure management, connector development, and ongoing maintenance. Commercial catalogs provide enterprise support, faster deployment, pre-built integrations, and managed infrastructure. Organizations with dedicated platform engineering teams often succeed with open source. Those prioritizing time-to-value and reducing operational overhead typically choose commercial solutions.

7. How much engineering effort does deploying an open source catalog require?

Plan for three to six months of initial deployment, depending on complexity. This includes infrastructure setup, connector configuration, metadata ingestion pipelines, and user onboarding. Ongoing maintenance requires dedicated DevOps time. Organizations typically allocate one to two full-time engineers for catalog operations at scale.

8. Can open source catalogs handle enterprise-scale deployments?

Yes. Tools like DataHub and OpenMetadata scale to enterprise volumes when properly architected. LinkedIn, Netflix, and Uber run open source catalogs at massive scale. Smaller organizations may find managed platforms more cost-effective.

9. When should I choose Atlan over open source alternatives?

Consider Atlan when you need unified governance across data and AI, faster time to value, or when your platform engineering capacity is limited. Many organizations start with open source and transition to managed platforms as governance complexity grows.

10. What is column-level lineage, and which open source catalogs support it?

Column-level lineage tracks individual field transformations through your data pipeline, showing how specific columns change from source to destination. This granularity enables precise impact analysis and debugging. DataHub, OpenMetadata, and Marquez offer column-level lineage capabilities.
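
To make impact analysis concrete, here is a toy Python sketch that treats column-level lineage as a directed graph and walks it downstream. The column names and the `downstream_columns` helper are invented for illustration; this is not how DataHub, OpenMetadata, or Marquez store or query lineage.

```python
from collections import defaultdict, deque

# Each edge reads "source column feeds target column". Names are invented.
EDGES = [
    ("raw.orders.amount", "staging.orders.amount_usd"),
    ("raw.orders.currency", "staging.orders.amount_usd"),
    ("staging.orders.amount_usd", "marts.revenue.daily_total"),
    ("raw.orders.order_id", "staging.orders.order_id"),
]


def downstream_columns(column: str, edges: list[tuple[str, str]]) -> set[str]:
    """Breadth-first walk of the lineage graph to find every affected column."""
    children = defaultdict(list)
    for source, target in edges:
        children[source].append(target)

    seen: set[str] = set()
    queue = deque([column])
    while queue:
        current = queue.popleft()
        for target in children[current]:
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen
```

Asking for the downstream columns of `raw.orders.currency` returns the staging and marts columns that depend on it, while `raw.orders.order_id` affects only one staging column. Table-level lineage would flag every column in those tables instead, which is why the finer granularity matters for debugging.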

Ready to move from DIY scripts to modern, automated data cataloging?

Open source data catalog software has matured significantly. But maturity has limits. Every open-source catalog on this list requires engineering investment for deployment, maintenance, and feature development.

If your team has the platform engineering muscle, open source gives you control and flexibility. If you are hitting the walls of maintenance overhead, connector gaps, or governance complexity, a managed platform like Atlan helps accelerate your program without sacrificing depth.
